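Multi-Image Generation Streamlit App: Chaining Llama & Stable Diffusion using TorchServe, torch.compile & OpenVINO

This Multi-Image Generation Streamlit app generates multiple images from a single text prompt. Instead of calling Stable Diffusion directly, it chains Llama and Stable Diffusion to enhance the image generation process. Here is how it works: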

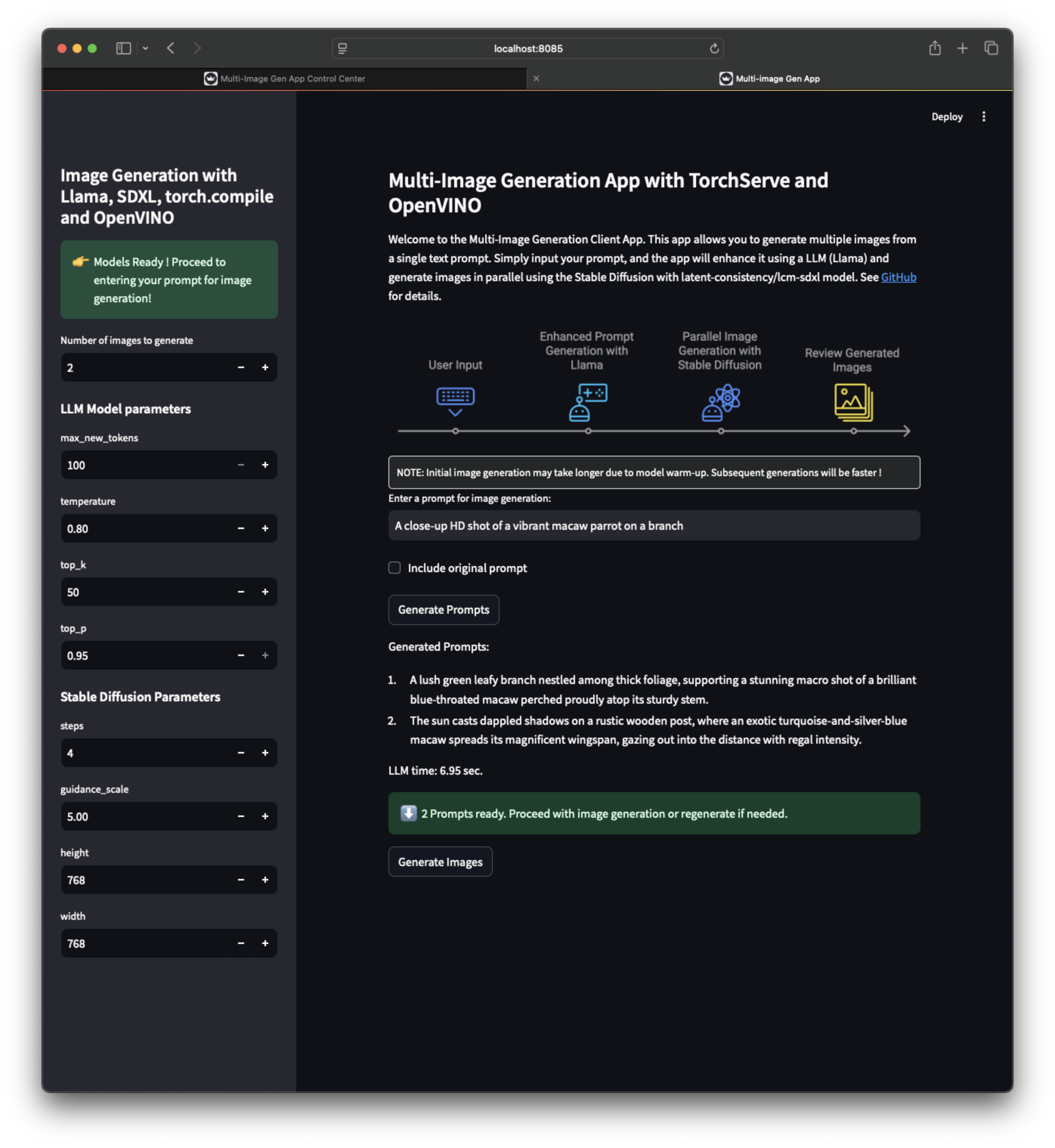

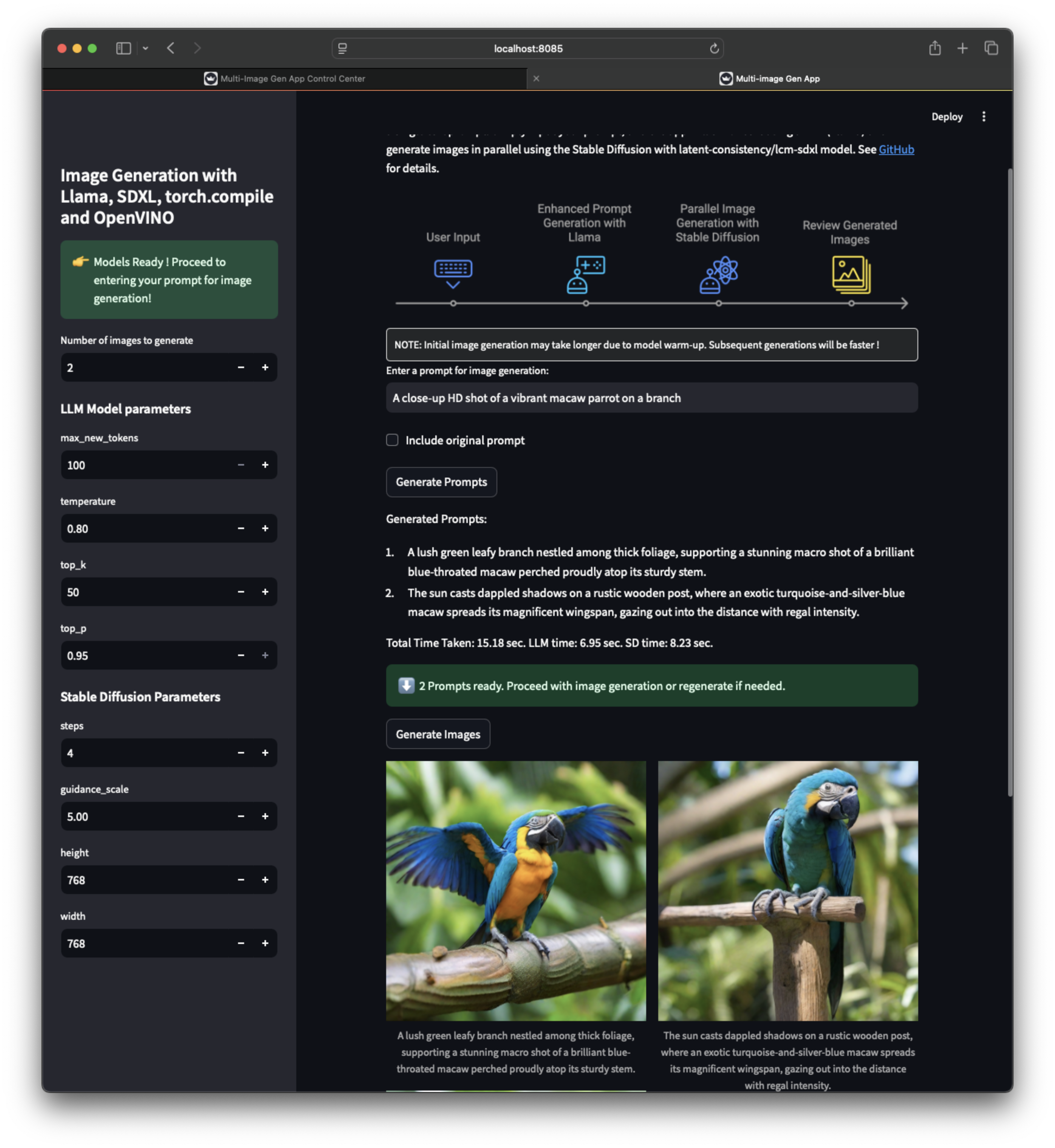

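The app takes a user prompt and uses Meta-Llama-3.2 to create multiple interesting and relevant prompts.

These generated prompts are then sent to Stable Diffusion with the latent-consistency/lcm-sdxl model to generate images.

For performance optimization, the models are compiled using torch.compile with the OpenVINO backend.

The application uses TorchServe for efficient model serving and management.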
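The chaining described above can be sketched in a few lines of Python. This is an illustrative outline only: `multi_image_pipeline`, `generate_prompts`, and `generate_image` are hypothetical names standing in for the Llama and Stable Diffusion calls, and they are not taken from the app's source.

```python
from typing import Callable, List


def multi_image_pipeline(
    user_prompt: str,
    num_images: int,
    generate_prompts: Callable[[str, int], List[str]],
    generate_image: Callable[[str], bytes],
) -> List[bytes]:
    """Expand one user prompt into several prompts, then render one image per prompt."""
    # Stage 1: the LLM turns a single prompt into `num_images` related prompts.
    prompts = generate_prompts(user_prompt, num_images)
    # Stage 2: each generated prompt is sent to the diffusion model.
    return [generate_image(p) for p in prompts]


# Stub models so the sketch runs without downloading anything.
def fake_llm(prompt: str, n: int) -> List[str]:
    return [f"{prompt}, variation {i + 1}" for i in range(n)]


def fake_sd(prompt: str) -> bytes:
    return prompt.encode("utf-8")  # placeholder for real image bytes


images = multi_image_pipeline("a red fox", 3, fake_llm, fake_sd)
print(len(images))
```

In the real app each stage would be a request to a model served by TorchServe; the stubs only make the data flow visible.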

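Quick Start Guide

Prerequisites:

Docker installed on your system

Hugging Face token: Create a Hugging Face account and obtain a token with access to the meta-llama/Llama-3.2-3B-Instruct model.

To launch the Multi-Image Generation app, follow these steps: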

# 1: Set HF Token as Env variable

export HUGGINGFACE_TOKEN=<HUGGINGFACE_TOKEN>

# 2: Build Docker image for this Multi-Image Generation App

git clone https://github.com/pytorch/serve.git

cd serve

./examples/usecases/llm_diffusion_serving_app/docker/build_image.sh

# 3: Launch the streamlit app for server & client

# After the Docker build is successful, you will see a "docker run" command printed to the console.

# Run that "docker run" command to launch the Streamlit app for both the server and client.
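Because the build passes the token as a build argument, it can help to confirm that the variable is set before starting the build. The following is a minimal sketch under the assumption that user access tokens start with `hf_` (as in the sample build output); `hf_token_problem` is a hypothetical helper, and it does not verify that the token actually has access to the Llama model.

```python
import os
from typing import Mapping


def hf_token_problem(env: Mapping[str, str]) -> str:
    """Return a description of the problem with HUGGINGFACE_TOKEN, or '' if none is found."""
    token = env.get("HUGGINGFACE_TOKEN", "").strip()
    if not token:
        return "HUGGINGFACE_TOKEN is not set"
    if not token.startswith("hf_"):
        # Assumption: user access tokens begin with "hf_".
        return "HUGGINGFACE_TOKEN does not start with 'hf_'"
    return ""


if __name__ == "__main__":
    problem = hf_token_problem(os.environ)
    print(problem or "HUGGINGFACE_TOKEN looks plausible")
```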

Sample output of the Docker build:

ubuntu@ip-10-0-0-137:~/serve$ ./examples/usecases/llm_diffusion_serving_app/docker/build_image.sh

EXAMPLE_DIR: .//examples/usecases/llm_diffusion_serving_app/docker

ROOT_DIR: /home/ubuntu/serve

DOCKER_BUILDKIT=1 docker buildx build --platform=linux/amd64 --file .//examples/usecases/llm_diffusion_serving_app/docker/Dockerfile --build-arg BASE_IMAGE="pytorch/torchserve:latest-cpu" --build-arg EXAMPLE_DIR=".//examples/usecases/llm_diffusion_serving_app/docker" --build-arg HUGGINGFACE_TOKEN=hf_<token> --build-arg HTTP_PROXY= --build-arg HTTPS_PROXY= --build-arg NO_PROXY= -t "pytorch/torchserve:llm_diffusion_serving_app" .

[+] Building 1.4s (18/18) FINISHED docker:default

=> [internal] load .dockerignore 0.0s

.

.

.

=> => naming to docker.io/pytorch/torchserve:llm_diffusion_serving_app 0.0s

Docker Build Successful !

............................ Next Steps ............................

--------------------------------------------------------------------

[Optional] Run the following command to benchmark Stable Diffusion:

--------------------------------------------------------------------

docker run --rm --platform linux/amd64 \

--name llm_sd_app_bench \

-v /home/ubuntu/serve/model-store-local:/home/model-server/model-store \

--entrypoint python \

pytorch/torchserve:llm_diffusion_serving_app \

/home/model-server/llm_diffusion_serving_app/sd-benchmark.py -ni 3

-------------------------------------------------------------------

Run the following command to start the Multi-Image generation App:

-------------------------------------------------------------------

docker run --rm -it --platform linux/amd64 \

--name llm_sd_app \

-p 127.0.0.1:8080:8080 \

-p 127.0.0.1:8081:8081 \

-p 127.0.0.1:8082:8082 \

-p 127.0.0.1:8084:8084 \

-p 127.0.0.1:8085:8085 \

-v /home/ubuntu/serve/model-store-local:/home/model-server/model-store \

-e MODEL_NAME_LLM=meta-llama/Llama-3.2-3B-Instruct \

-e MODEL_NAME_SD=stabilityai/stable-diffusion-xl-base-1.0 \

pytorch/torchserve:llm_diffusion_serving_app

Note: You can replace the model identifiers (MODEL_NAME_LLM, MODEL_NAME_SD) as needed.
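If you swap model identifiers often, the launch command can be assembled programmatically. The sketch below rebuilds the same `docker run` arguments shown above; `docker_run_args` is a hypothetical helper, and the model store path is the one from the sample output, so adjust it to your checkout.

```python
import shlex
from typing import List

IMAGE = "pytorch/torchserve:llm_diffusion_serving_app"
PORTS = (8080, 8081, 8082, 8084, 8085)


def docker_run_args(model_store: str, llm: str, sd: str) -> List[str]:
    """Build the argument list for the app's `docker run` command."""
    args = ["docker", "run", "--rm", "-it", "--platform", "linux/amd64",
            "--name", "llm_sd_app"]
    for port in PORTS:
        # Publish each port on the loopback interface only.
        args += ["-p", f"127.0.0.1:{port}:{port}"]
    args += ["-v", f"{model_store}:/home/model-server/model-store"]
    args += ["-e", f"MODEL_NAME_LLM={llm}", "-e", f"MODEL_NAME_SD={sd}"]
    args.append(IMAGE)
    return args


cmd = docker_run_args(
    "/home/ubuntu/serve/model-store-local",
    "meta-llama/Llama-3.2-3B-Instruct",
    "stabilityai/stable-diffusion-xl-base-1.0",
)
print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd)` without going through a shell, which avoids quoting problems in model names or paths.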

Expected Results

After launching the Docker container using the docker run .. command displayed after a successful build, you can access two separate Streamlit applications:

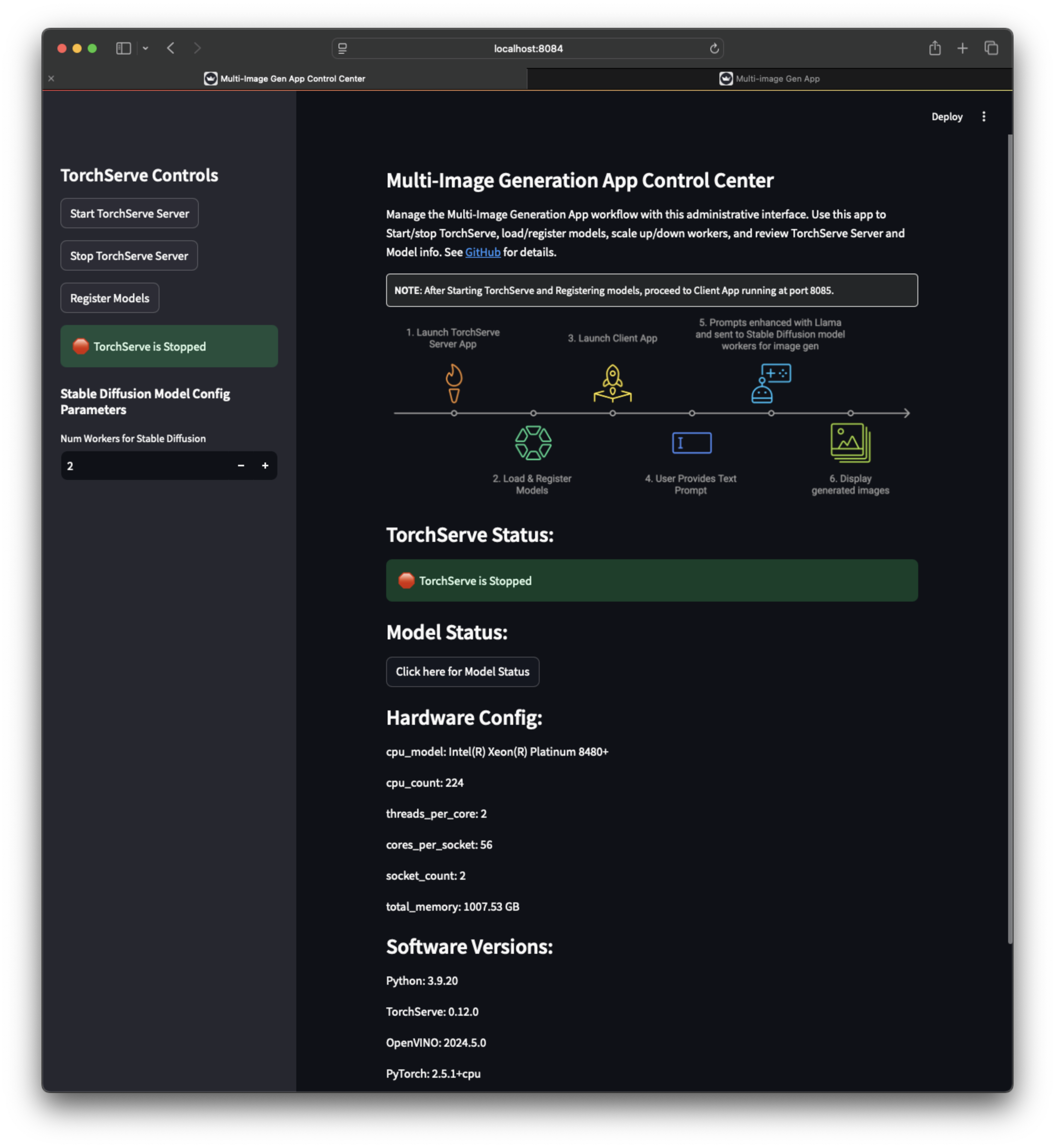

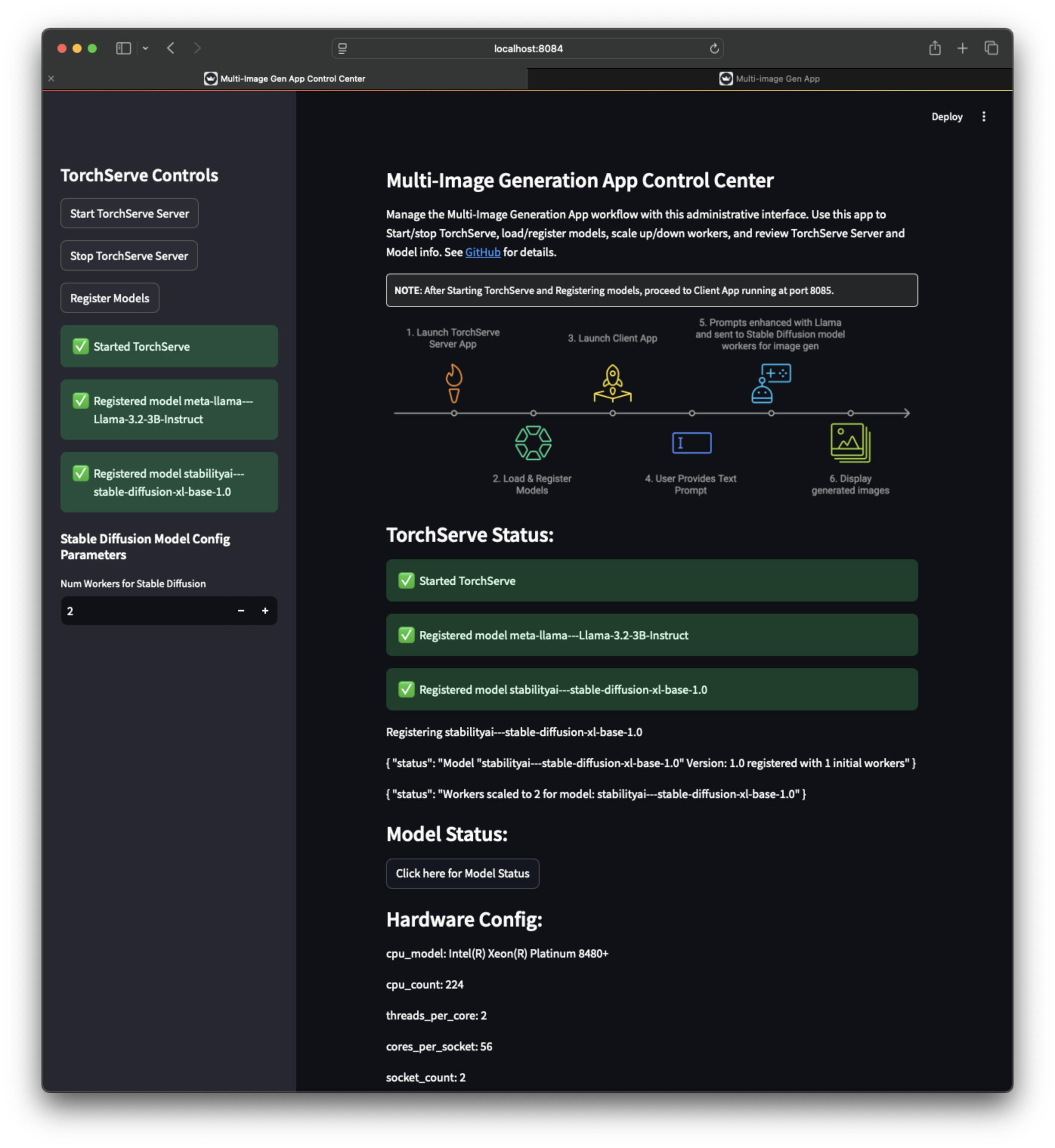

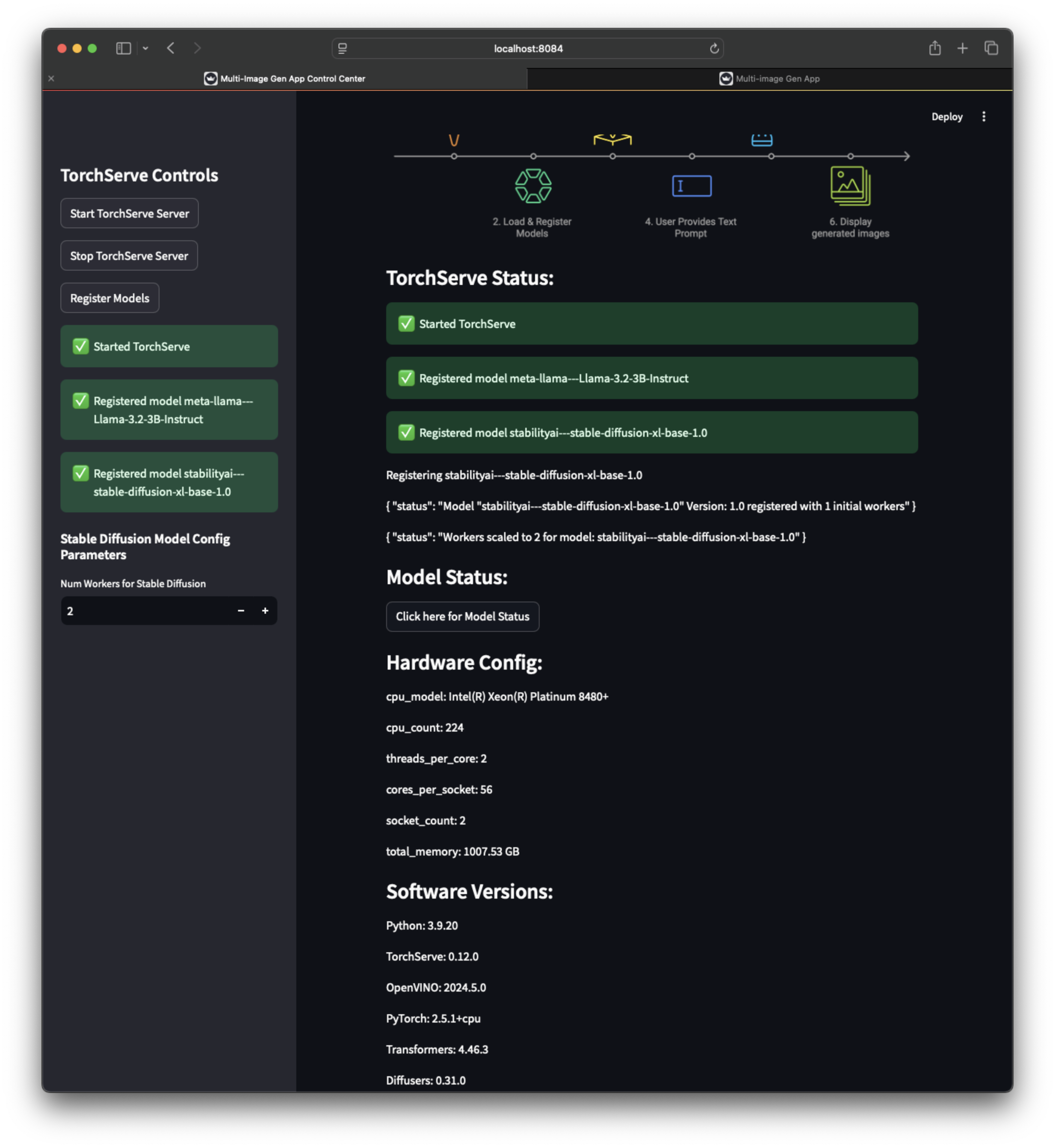

TorchServe server app (running at http://127.0.0.1:8084): start/stop TorchServe, load/register models, and scale workers up or down.

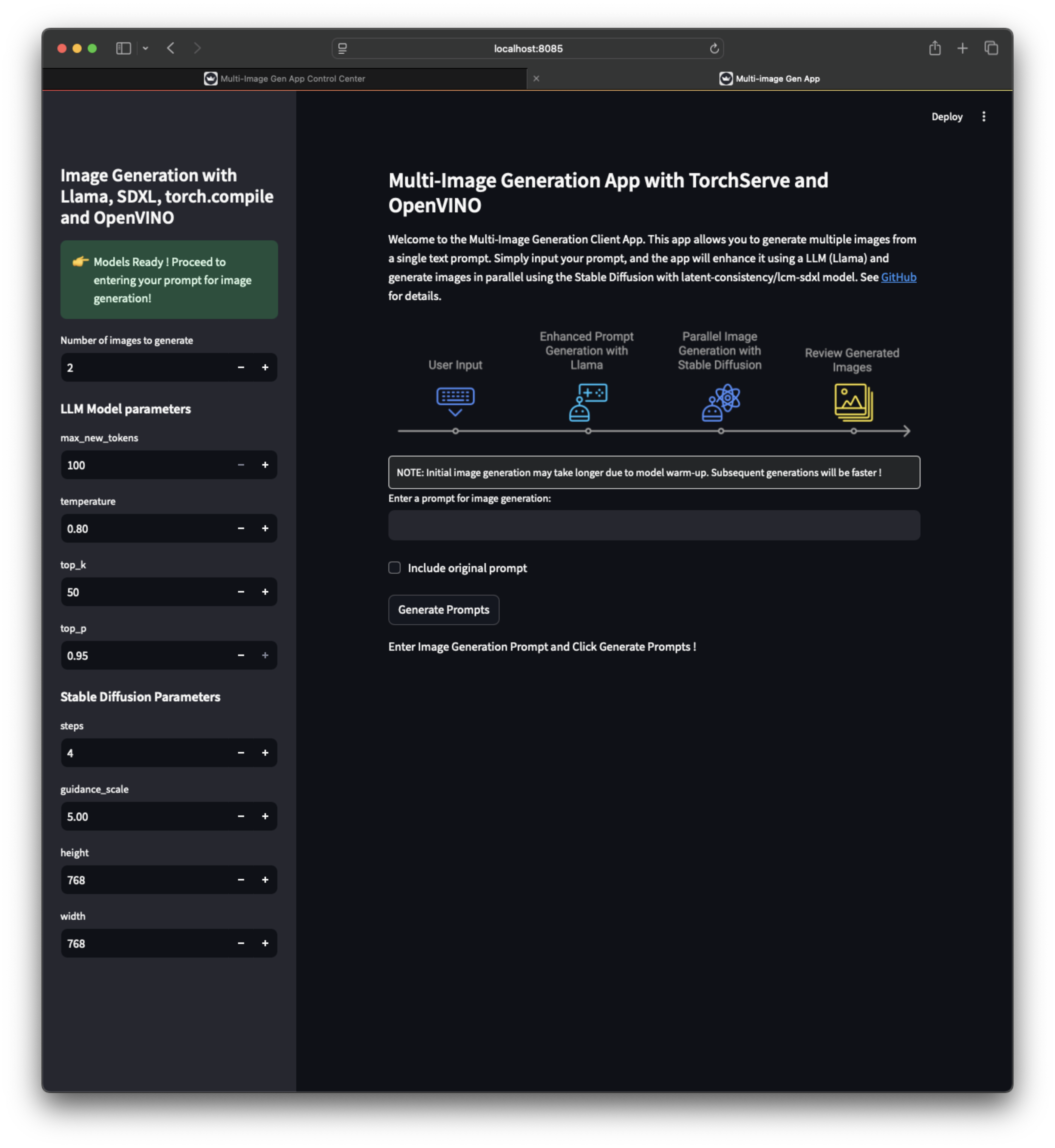

Client app (running at http://127.0.0.1:8085): enter the prompts used for image generation.
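Besides the two Streamlit apps, the container publishes ports 8080 to 8082. The sketch below assumes these map to TorchServe's default inference, management, and metrics ports, and that TorchServe answers `GET /ping` with a JSON status as in a default installation; `service_urls` and `torchserve_is_healthy` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Dict


def service_urls(host: str = "127.0.0.1") -> Dict[str, str]:
    """Map each published port to the service assumed to listen on it."""
    return {
        "inference": f"http://{host}:8080",   # TorchServe default inference port
        "management": f"http://{host}:8081",  # TorchServe default management port
        "metrics": f"http://{host}:8082",     # TorchServe default metrics port
        "server_app": f"http://{host}:8084",  # Streamlit server app
        "client_app": f"http://{host}:8085",  # Streamlit client app
    }


def torchserve_is_healthy(host: str = "127.0.0.1", timeout: float = 5.0) -> bool:
    """Call TorchServe's /ping endpoint and report whether it answers 'Healthy'."""
    url = service_urls(host)["inference"] + "/ping"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return json.load(resp).get("status") == "Healthy"
    except (OSError, ValueError):
        # Connection errors and malformed responses both count as "not healthy".
        return False
```

Calling `torchserve_is_healthy()` after starting TorchServe from the server app is a quick way to confirm the backend is reachable before sending prompts from the client app.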

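Note: You can also run a quick benchmark comparing the performance of Stable Diffusion in eager mode and with torch.compile using the inductor and openvino backends. Review the docker run .. command displayed after a successful build to run the benchmark.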
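The three run modes in that comparison differ only in how the model is wrapped. As a rough sketch, the helper below picks a `torch.compile` backend when one is available and otherwise leaves the module in eager mode. The preference order and the names `choose_backend` and `compile_or_eager` are assumptions for illustration; whether `openvino` appears in `torch.compiler.list_backends()` can depend on the installed PyTorch and OpenVINO versions, and some versions may need `import openvino.torch` first.

```python
from typing import Optional, Sequence

# Assumed preference order for this sketch: OpenVINO first, then Inductor.
PREFERRED_BACKENDS = ("openvino", "inductor")


def choose_backend(available: Sequence[str]) -> Optional[str]:
    """Return the first preferred backend that is available, or None for eager mode."""
    for name in PREFERRED_BACKENDS:
        if name in available:
            return name
    return None


def compile_or_eager(module):
    """Wrap `module` with torch.compile when a preferred backend is installed."""
    import torch  # imported lazily so choose_backend works without PyTorch

    backend = choose_backend(torch.compiler.list_backends())
    if backend is None:
        return module  # eager mode: run the module unchanged
    return torch.compile(module, backend=backend)


print(choose_backend(["cudagraphs", "inductor", "openvino"]))
print(choose_backend(["cudagraphs"]))
```

Note that `torch.compile` is lazy: compilation happens on the first call of the wrapped module, which is why the benchmark reports a separate warm-up time.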

Sample output of launching the app:

ubuntu@ip-10-0-0-137:~/serve$ docker run --rm -it --platform linux/amd64 \

--name llm_sd_app \

-p 127.0.0.1:8080:8080 \

-p 127.0.0.1:8081:8081 \

-p 127.0.0.1:8082:8082 \

-p 127.0.0.1:8084:8084 \

-p 127.0.0.1:8085:8085 \

-v /home/ubuntu/serve/model-store-local:/home/model-server/model-store \

-e MODEL_NAME_LLM=meta-llama/Llama-3.2-3B-Instruct \

-e MODEL_NAME_SD=stabilityai/stable-diffusion-xl-base-1.0 \

pytorch/torchserve:llm_diffusion_serving_app

Preparing meta-llama/Llama-3.2-1B-Instruct

/home/model-server/llm_diffusion_serving_app/llm /home/model-server/llm_diffusion_serving_app

Model meta-llama---Llama-3.2-1B-Instruct already downloaded.

Model archive for meta-llama---Llama-3.2-1B-Instruct exists.

/home/model-server/llm_diffusion_serving_app

Preparing stabilityai/stable-diffusion-xl-base-1.0

/home/model-server/llm_diffusion_serving_app/sd /home/model-server/llm_diffusion_serving_app

Model stabilityai/stable-diffusion-xl-base-1.0 already downloaded

Model archive for stabilityai---stable-diffusion-xl-base-1.0 exists.

/home/model-server/llm_diffusion_serving_app

Collecting usage statistics. To deactivate, set browser.gatherUsageStats to false.

Collecting usage statistics. To deactivate, set browser.gatherUsageStats to false.

You can now view your Streamlit app in your browser.

Local URL: http://127.0.0.1:8085

Network URL: http://123.11.0.2:8085

External URL: http://123.123.12.34:8085

You can now view your Streamlit app in your browser.

Local URL: http://127.0.0.1:8084

Network URL: http://123.11.0.2:8084

External URL: http://123.123.12.34:8084
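The log lines above suggest that the archive name is derived from the model identifier by replacing `/` with `---`. This is inferred from the sample output only, not from the app's source; `archive_name` is a hypothetical helper that reproduces the pattern.

```python
def archive_name(model_id: str) -> str:
    """Turn a Hugging Face model id into a name without path separators."""
    return model_id.replace("/", "---")


print(archive_name("stabilityai/stable-diffusion-xl-base-1.0"))
```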

Sample output of the Stable Diffusion benchmark:

To run the Stable Diffusion benchmark, use sd-benchmark.py. See the sample console output below.
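The benchmark summary reports a warm-up time and an average time with a spread over the timed iterations. A generic timing harness of that shape might look like the sketch below. It is not the code of sd-benchmark.py; `benchmark` is a hypothetical function, and the use of the sample standard deviation for the `+/-` value is an assumption.

```python
import statistics
import time
from typing import Callable, Tuple


def benchmark(fn: Callable[[], object], num_iter: int) -> Tuple[float, float, float]:
    """Return (warm-up seconds, mean seconds, standard deviation) for `fn`."""
    start = time.perf_counter()
    fn()  # warm-up call; for compiled models this includes compilation time
    warmup = time.perf_counter() - start

    timings = []
    for _ in range(num_iter):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)

    mean = statistics.mean(timings)
    # With a single iteration there is no spread to report.
    std = statistics.stdev(timings) if len(timings) > 1 else 0.0
    return warmup, mean, std


warmup, mean, std = benchmark(lambda: sum(range(100_000)), num_iter=3)
print(f"warm-up {warmup:.4f} s, average {mean:.4f} +/- {std:.4f} s")
```

Separating the warm-up call matters for `torch.compile` modes, where the first call is much slower than the steady state.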

ubuntu@ip-10-0-0-137:~/serve$ docker run --rm --platform linux/amd64 \

--name llm_sd_app_bench \

-v /home/ubuntu/serve/model-store-local:/home/model-server/model-store \

--entrypoint python \

pytorch/torchserve:llm_diffusion_serving_app \

/home/model-server/llm_diffusion_serving_app/sd-benchmark.py -ni 3

.

.

.

Hardware Info:

--------------------------------------------------------------------------------

cpu_model: Intel(R) Xeon(R) Platinum 8488C

cpu_count: 64

threads_per_core: 2

cores_per_socket: 32

socket_count: 1

total_memory: 247.71 GB

Software Versions:

--------------------------------------------------------------------------------

Python: 3.9.20

TorchServe: 0.12.0

OpenVINO: 2024.5.0

PyTorch: 2.5.1+cpu

Transformers: 4.46.3

Diffusers: 0.31.0

Benchmark Summary:

--------------------------------------------------------------------------------

+-------------+----------------+---------------------------+
| Run Mode    | Warm-up Time   | Average Time for 3 iter   |
+=============+================+===========================+
| eager       | 11.25 seconds  | 10.13 +/- 0.02 seconds    |
+-------------+----------------+---------------------------+
| tc_inductor | 85.40 seconds  | 8.85 +/- 0.03 seconds     |
+-------------+----------------+---------------------------+
| tc_openvino | 52.57 seconds  | 2.58 +/- 0.04 seconds     |
+-------------+----------------+---------------------------+

Results saved in directory: /home/model-server/model-store/benchmark_results_20241123_071103

Files in the /home/model-server/model-store/benchmark_results_20241123_071103 directory:

benchmark_results.json

image-eager-final.png

image-tc_inductor-final.png

image-tc_openvino-final.png

Results saved at /home/model-server/model-store/ which is a Docker container mount, corresponds to 'serve/model-store-local/' on the host machine.
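To put the summary in relative terms, the average times from the 3-iteration run can be converted into speedup factors over eager mode. The numbers below are copied from the sample output above and apply only to that hardware and software setup; `speedups` is a hypothetical helper.

```python
# Average seconds per run mode, copied from the 3-iteration summary table.
avg_seconds = {"eager": 10.13, "tc_inductor": 8.85, "tc_openvino": 2.58}


def speedups(times: dict, baseline: str = "eager") -> dict:
    """Return each mode's speedup factor relative to the baseline mode."""
    base = times[baseline]
    return {mode: round(base / t, 2) for mode, t in times.items()}


print(speedups(avg_seconds))
# {'eager': 1.0, 'tc_inductor': 1.14, 'tc_openvino': 3.93}
```

The steady-state gain has to be weighed against warm-up time: in this sample, the compiled modes take roughly 50 to 85 seconds on the first call.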

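Sample output of the Stable Diffusion benchmark with profiling:

To run the Stable Diffusion benchmark with profiling, use --run_profiling or -rp. See the sample console output below. Sample profiling benchmark output files are available in assets/benchmark_results_20241123_044407/.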
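The sample output shows that enabling profiling forces a single iteration. The following sketch shows one way such option handling could be written with `argparse`. The flags `-ni`, `-rp`, and `--run_profiling` come from the commands in this document; the long name `--num_iter`, the default of 3, and the function `parse_args` are assumptions, and this is not the parser used by sd-benchmark.py.

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description="Benchmark option sketch")
    # The long option name and the default of 3 are assumptions for this sketch.
    parser.add_argument("-ni", "--num_iter", type=int, default=3,
                        help="number of timed iterations")
    parser.add_argument("-rp", "--run_profiling", action="store_true",
                        help="collect profiler output for each run mode")
    args = parser.parse_args(argv)
    if args.run_profiling and args.num_iter != 1:
        # Mirrors the console message: profiling forces a single iteration.
        print("num_iter is set to 1 as run_profiling flag is enabled !")
        args.num_iter = 1
    return args


print(parse_args(["-rp", "-ni", "5"]).num_iter)
print(parse_args(["-ni", "5"]).num_iter)
```

Limiting profiled runs to one iteration keeps the profiler output small and avoids averaging timings that include profiling overhead.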

ubuntu@ip-10-0-0-137:~/serve$ docker run --rm --platform linux/amd64 \

--name llm_sd_app_bench \

-v /home/ubuntu/serve/model-store-local:/home/model-server/model-store \

--entrypoint python \

pytorch/torchserve:llm_diffusion_serving_app \

/home/model-server/llm_diffusion_serving_app/sd-benchmark.py -rp

.

.

.

Hardware Info:

--------------------------------------------------------------------------------

cpu_model: Intel(R) Xeon(R) Platinum 8488C

cpu_count: 64

threads_per_core: 2

cores_per_socket: 32

socket_count: 1

total_memory: 247.71 GB

Software Versions:

--------------------------------------------------------------------------------

Python: 3.9.20

TorchServe: 0.12.0

OpenVINO: 2024.5.0

PyTorch: 2.5.1+cpu

Transformers: 4.46.3

Diffusers: 0.31.0

Benchmark Summary:

--------------------------------------------------------------------------------

+-------------+----------------+---------------------------+
| Run Mode    | Warm-up Time   | Average Time for 1 iter   |
+=============+================+===========================+
| eager       | 9.33 seconds   | 8.57 +/- 0.00 seconds     |
+-------------+----------------+---------------------------+
| tc_inductor | 81.11 seconds  | 7.20 +/- 0.00 seconds     |
+-------------+----------------+---------------------------+
| tc_openvino | 50.76 seconds  | 1.72 +/- 0.00 seconds     |
+-------------+----------------+---------------------------+

Results saved in directory: /home/model-server/model-store/benchmark_results_20241123_071629

Files in the /home/model-server/model-store/benchmark_results_20241123_071629 directory:

benchmark_results.json

image-eager-final.png

image-tc_inductor-final.png

image-tc_openvino-final.png

profile-eager.txt

profile-tc_inductor.txt

profile-tc_openvino.txt

num_iter is set to 1 as run_profiling flag is enabled !

Results saved at /home/model-server/model-store/ which is a Docker container mount, corresponds to 'serve/model-store-local/' on the host machine.
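Since the results are written inside the container mount, you need the corresponding host path to open them. The sketch below translates a container path to the host side of the `-v` mapping used in the commands above; `to_host_path` is a hypothetical helper, and the host root is the one from the sample output.

```python
from pathlib import PurePosixPath

# Container side of the -v mount used in the docker run commands.
CONTAINER_ROOT = PurePosixPath("/home/model-server/model-store")


def to_host_path(container_path: str, host_root: str) -> str:
    """Translate a path under the container mount to its location on the host."""
    # relative_to raises ValueError if the path is outside the mount.
    relative = PurePosixPath(container_path).relative_to(CONTAINER_ROOT)
    return str(PurePosixPath(host_root) / relative)


print(to_host_path(
    "/home/model-server/model-store/benchmark_results_20241123_071629/profile-eager.txt",
    "/home/ubuntu/serve/model-store-local",
))
```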

Multi-Image Generation App UI

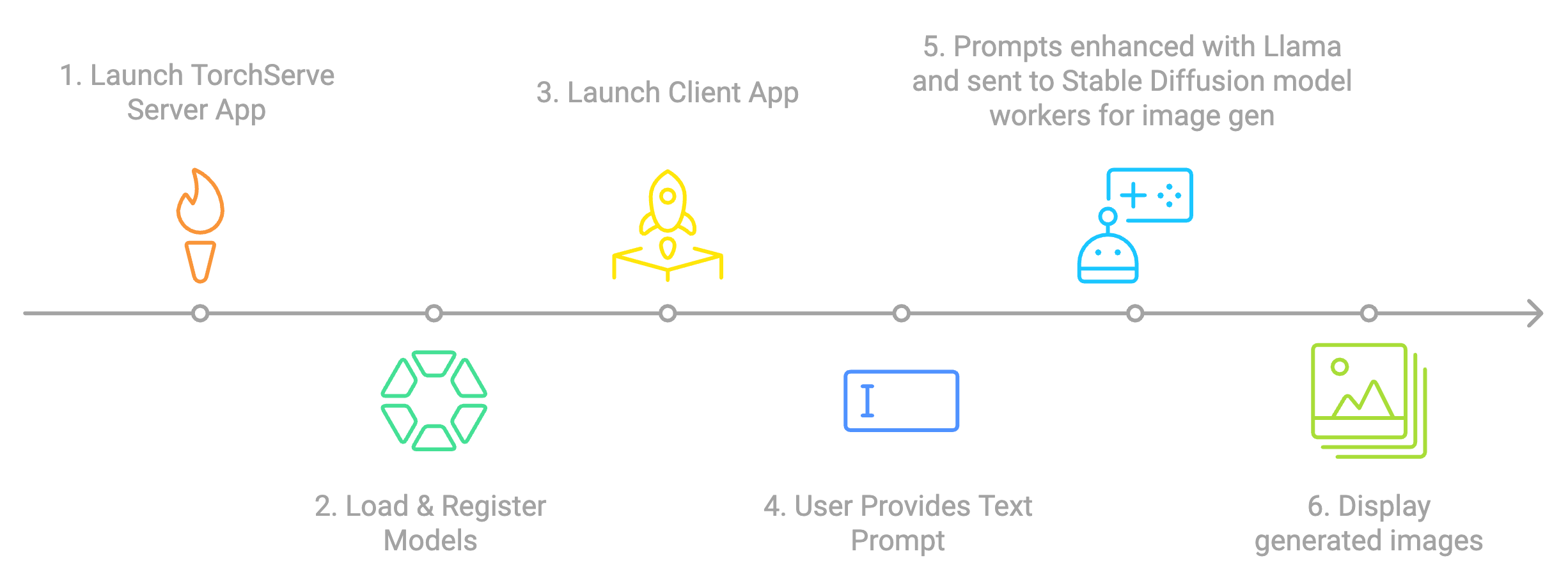

App Workflow

App Screenshots

| Server App Screenshot 1 | Server App Screenshot 2 | Server App Screenshot 3 |
|---|---|---|

| Client App Screenshot 1 | Client App Screenshot 2 | Client App Screenshot 3 |
|---|---|---|