
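Pendulum: Writing your environment and transforms with TorchRL

Creating an environment (a simulator or an interface to a physical control system) is an integral part of reinforcement learning and control engineering.

TorchRL provides a set of tools to do this in multiple contexts. This tutorial demonstrates how to use PyTorch and TorchRL to code a pendulum simulator from the ground up. It is freely inspired by the Pendulum-v1 implementation from the OpenAI-Gym/Farama-Gymnasium control library.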

Figure: Simple Pendulum

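Key learnings:

- How to design an environment in TorchRL: writing specs (input, observation and reward), and implementing behavior (seeding, reset and step);
- How to transform your environment inputs and outputs, and how to write your own transforms;
- How to use TensorDict to carry arbitrary data structures through the codebase.

In the process, we will touch on three key components of TorchRL.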

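To give a sense of what can be achieved with TorchRL's environments, we will design a stateless environment. While stateful environments keep track of the latest physical state encountered and rely on it to simulate the state-to-state transition, stateless environments expect the current state to be provided to them at each step, along with the action taken. TorchRL supports both types of environments, but stateless environments are more generic and hence cover a broader range of features of the environment API in TorchRL.

Modeling stateless environments gives users full control over the inputs and outputs of the simulator: one can reset an experiment at any stage or actively modify the dynamics from the outside. However, it assumes that we have some control over the task, which may not always be the case: solving a problem where we cannot control the current state is more challenging but has a much wider set of applications.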
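The difference between the two styles can be illustrated with a toy sketch in plain Python. The names `StatefulCounter` and `stateless_step` are hypothetical and unrelated to TorchRL's classes; they only show where the state lives in each case.

```python
class StatefulCounter:
    """Stateful: the simulator remembers its latest state between calls."""

    def __init__(self):
        self.state = 0

    def step(self, action):
        self.state += action
        return self.state


def stateless_step(state, action):
    """Stateless: the caller supplies the current state on every call."""
    return state + action


env = StatefulCounter()
env.step(1)
stateful_result = env.step(2)  # depends on the hidden history: 0 + 1 + 2

stateless_result = stateless_step(10, 2)  # depends only on the arguments
print(stateful_result, stateless_result)
```

With the stateless function, the caller can restart from any state at any time simply by passing a different `state` argument.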

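Another advantage of stateless environments is that they enable batched execution of transition simulations. If the backend and the implementation allow it, an algebraic operation can be executed seamlessly on scalars, vectors, or tensors. This tutorial gives such examples.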
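As a minimal sketch of this idea, assuming only PyTorch, the same update function can be applied to a single state or to a batch of states without modification. The function name `next_angle` and the time step value are illustrative assumptions.

```python
import torch


def next_angle(th, thdot, dt=0.05):
    # Plain tensor algebra: works for any broadcastable input shapes.
    return th + thdot * dt


single = next_angle(torch.tensor(0.0), torch.tensor(1.0))  # one simulation
batch = next_angle(torch.zeros(4), torch.ones(4))  # four simulations at once
print(single.shape, batch.shape)
```

Because the function never inspects the input shape, the batch dimension is handled entirely by the tensor backend.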

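This tutorial is structured as follows:

- We will first get acquainted with the environment properties: its shape (batch_size), its methods (mainly step(), reset() and set_seed()) and finally its specs.
- After having coded our simulator, we will demonstrate how it can be used during training with transforms.
- We will explore new avenues that follow from TorchRL's API, including the possibility of transforming inputs, the vectorized execution of the simulation, and the possibility of backpropagating through the simulation graph.
- Finally, we will train a simple policy to solve the system we implemented.

A built-in version of this environment can be found in the torchrl.envs.PendulumEnv class.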

from collections import defaultdict
from typing import Optional

import numpy as np
import torch
import tqdm
from tensordict import TensorDict, TensorDictBase
from tensordict.nn import TensorDictModule
from torch import nn

from torchrl.data import Bounded, Composite, Unbounded
from torchrl.envs import (
    CatTensors,
    EnvBase,
    Transform,
    TransformedEnv,
    UnsqueezeTransform,
)
from torchrl.envs.transforms.transforms import _apply_to_composite
from torchrl.envs.utils import check_env_specs, step_mdp

DEFAULT_X = np.pi
DEFAULT_Y = 1.0

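When designing a new environment class, there are four things to take care of:

- EnvBase._reset(), which codes the reset of the simulator to a (potentially random) initial state;
- EnvBase._step(), which codes the state transition dynamics;
- EnvBase._set_seed(), which implements the seeding mechanism;
- the environment specs.

Let us first describe the problem at hand: we would like to model a simple pendulum over which we can control the torque applied on its fixed point. Our goal is to place the pendulum in the upward position (angular position 0 by convention) and have it stand still in that position. To design our dynamic system, we need to define two equations: the motion equation following an action (the torque applied) and the reward equation that will constitute our objective function.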

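For the motion equation, we will update the angular velocity following:

\[\dot{\theta}_{t+1} = \dot{\theta}_t + \left(\frac{3g}{2L} \sin(\theta_t) + \frac{3}{mL^2} u\right) dt\]

where \(\dot{\theta}\) is the angular velocity in rad/sec, \(g\) is the gravitational force, \(L\) is the pendulum length, \(m\) is its mass, \(\theta\) is its angular position and \(u\) is the torque. The angular position is then updated according to

\[\theta_{t+1} = \theta_t + \dot{\theta}_{t+1} dt\]

We define our reward as

\[r = -(\theta^2 + 0.1 \dot{\theta}^2 + 0.001 u^2)\]

which is maximized when the angle is close to 0 (pendulum in upward position), the angular velocity is close to 0 (no motion) and the torque is 0 too.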
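To make the update rule and the reward concrete, here is a small worked sketch in plain Python that mirrors the arithmetic of the _step() code shown later in this tutorial. The function names `pendulum_update` and `reward` are hypothetical, the default parameter values are those produced by gen_params() further down, and the clamping of torque and speed is omitted for brevity.

```python
import math


def pendulum_update(th, thdot, u, g=10.0, m=1.0, l=1.0, dt=0.05):
    """One integration step: update the velocity first, then the angle."""
    new_thdot = thdot + (3 * g / (2 * l) * math.sin(th) + 3.0 / (m * l**2) * u) * dt
    new_th = th + new_thdot * dt
    return new_th, new_thdot


def reward(th, thdot, u):
    """Negative cost: penalizes angle, angular velocity and torque."""
    return -(th**2 + 0.1 * thdot**2 + 0.001 * u**2)


# Upright, at rest, no torque: the state does not move and the reward is 0.
upright = pendulum_update(0.0, 0.0, 0.0)
# Hanging down (th = pi), moving, with full torque: a strongly negative reward.
r_down = reward(math.pi, 1.0, 2.0)
print(upright, r_down)
```

The angle term dominates the cost, so a pendulum hanging down is penalized far more than one that is upright but slowly moving.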

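Coding the effects of an action: _step()

The step method is the first thing to consider, as it encodes the simulation that is of interest to us. In TorchRL, the EnvBase class has an EnvBase.step() method that receives a tensordict.TensorDict instance with an "action" entry indicating what action is to be taken.

To facilitate reading from and writing to that tensordict, and to make sure that the keys are consistent with what the library expects, the simulation part has been delegated to a private abstract method _step(), which reads input data from a tensordict and writes a new tensordict with the output data.

The _step() method should do the following:

- Read the input keys (such as "action") and execute the simulation based on these;
- Retrieve observations, done state and reward;
- Write the set of observation values along with the reward and done state at the corresponding entries in a new TensorDict.

Next, the step() method will merge the output of _step() into the input tensordict to enforce input/output consistency.

Typically, for stateful environments, this will look like this: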

>>> policy(env.reset())

>>> print(tensordict)

TensorDict(

fields={

action: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=cpu,

is_shared=False)

>>> env.step(tensordict)

>>> print(tensordict)

TensorDict(

fields={

action: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

next: TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

reward: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=cpu,

is_shared=False),

observation: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=cpu,

is_shared=False)

Notice that the root tensordict has not changed; the only modification is the appearance of a new "next" entry that contains the new information.
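As a rough analogy, using plain Python dicts in place of TensorDict, the nesting behavior can be sketched as follows. The helpers `step` and `toy_step` are hypothetical, and this is not how TorchRL is implemented internally; it only illustrates that the output of the private method lands under a "next" key while the root entries stay untouched.

```python
def step(td, private_step):
    """Run the private step function and store its output under "next"."""
    td["next"] = private_step(td)
    return td


def toy_step(td):
    # Hypothetical dynamics: the observation grows by the action.
    return {
        "observation": td["observation"] + td["action"],
        "reward": 1.0,
        "done": False,
    }


td = {"observation": 0.0, "action": 0.5}
step(td, toy_step)
print(sorted(td))
```

The root "observation" still holds the value seen before the step, and the new observation is available at `td["next"]["observation"]`.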

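In the Pendulum example, our _step() method reads the relevant entries from the input tensordict and computes the position and velocity of the pendulum after the force encoded by the "action" key has been applied to it. We compute the new angular position of the pendulum "new_th" as the previous position "th" plus the new velocity "new_thdot" multiplied by the time interval dt.

Since our goal is to turn the pendulum up and keep it still in that position, our cost (negative reward) function is lower for positions close to the target and for low speeds. Indeed, we want to discourage positions that are far from being "upward" and/or speeds that are far from 0.

In our example, EnvBase._step() is encoded as a static method since our environment is stateless. In stateful settings, the self argument is needed, as the state needs to be read from the environment.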

def _step(tensordict):
    th, thdot = tensordict["th"], tensordict["thdot"]  # th := theta

    g_force = tensordict["params", "g"]
    mass = tensordict["params", "m"]
    length = tensordict["params", "l"]
    dt = tensordict["params", "dt"]
    u = tensordict["action"].squeeze(-1)
    u = u.clamp(-tensordict["params", "max_torque"], tensordict["params", "max_torque"])
    costs = angle_normalize(th) ** 2 + 0.1 * thdot**2 + 0.001 * (u**2)

    new_thdot = (
        thdot
        + (3 * g_force / (2 * length) * th.sin() + 3.0 / (mass * length**2) * u) * dt
    )
    new_thdot = new_thdot.clamp(
        -tensordict["params", "max_speed"], tensordict["params", "max_speed"]
    )
    new_th = th + new_thdot * dt
    reward = -costs.view(*tensordict.shape, 1)
    done = torch.zeros_like(reward, dtype=torch.bool)
    out = TensorDict(
        {
            "th": new_th,
            "thdot": new_thdot,
            "params": tensordict["params"],
            "reward": reward,
            "done": done,
        },
        tensordict.shape,
    )
    return out


def angle_normalize(x):
    return ((x + torch.pi) % (2 * torch.pi)) - torch.pi
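The angle wrapping used in the cost can be checked on plain floats. The sketch below re-implements the same formula with the standard `math` module (the name `angle_normalize_float` is hypothetical) to show that angles are mapped into the interval [-pi, pi), so that a full turn costs the same as no turn at all.

```python
import math


def angle_normalize_float(x):
    # Same formula as angle_normalize above, applied to Python floats.
    return ((x + math.pi) % (2 * math.pi)) - math.pi


no_turn = angle_normalize_float(0.0)
full_turn = angle_normalize_float(2 * math.pi)
three_quarters = angle_normalize_float(1.5 * math.pi)
print(no_turn, full_turn, three_quarters)
```

Here `three_quarters` comes out close to -pi/2: rotating by 3*pi/2 in one direction is equivalent to rotating by pi/2 in the other.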

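Resetting the simulator: _reset()

The second method we need to care about is _reset(). Like _step(), it should write the observation entries and possibly a done state in the tensordict it outputs (if the done state is omitted, it will be filled in as False by the parent method reset()). In some contexts, the _reset method needs to receive a command from the function that called it (for example, in multi-agent settings we may want to indicate which agents need to be reset). This is why the _reset() method also expects a tensordict as input, although it may perfectly well be empty or None.

The parent EnvBase.reset() does some simple checks like EnvBase.step() does, such as making sure that a "done" state is returned in the output tensordict and that the shapes match what is expected from the specs.

For us, the only important thing to consider is whether EnvBase._reset() contains all the expected observations. Once more, since we are working with a stateless environment, we pass the configuration of the pendulum in a nested tensordict named "params".

In this example, we do not pass a done state, as this is not mandatory for _reset() and our environment is non-terminating, so we always expect it to be False.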

def _reset(self, tensordict):
    if tensordict is None or tensordict.is_empty():
        # if no ``tensordict`` is passed, we generate a single set of hyperparameters
        # Otherwise, we assume that the input ``tensordict`` contains all the relevant
        # parameters to get started.
        tensordict = self.gen_params(batch_size=self.batch_size)

    high_th = torch.tensor(DEFAULT_X, device=self.device)
    high_thdot = torch.tensor(DEFAULT_Y, device=self.device)
    low_th = -high_th
    low_thdot = -high_thdot

    # for non batch-locked environments, the input ``tensordict`` shape dictates the number
    # of simulators run simultaneously. In other contexts, the initial
    # random state's shape will depend upon the environment batch-size instead.
    th = (
        torch.rand(tensordict.shape, generator=self.rng, device=self.device)
        * (high_th - low_th)
        + low_th
    )
    thdot = (
        torch.rand(tensordict.shape, generator=self.rng, device=self.device)
        * (high_thdot - low_thdot)
        + low_thdot
    )
    out = TensorDict(
        {
            "th": th,
            "thdot": thdot,
            "params": tensordict["params"],
        },
        batch_size=tensordict.shape,
    )
    return out

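Environment metadata: env.*_spec

The specs define the input and output domain of the environment. It is important that the specs accurately define the tensors that will be received at runtime, as they are often used to carry information about environments in multiprocessing and distributed settings. They can also be used to instantiate lazily defined neural networks and test scripts without actually querying the environment (which can be costly with real-world physical systems, for instance).

There are four specs that we must code in our environment:

- EnvBase.observation_spec: a Composite instance where each key is an observation (a Composite can be viewed as a dictionary of specs);
- EnvBase.action_spec: it can be any type of spec, but it is required that it corresponds to the "action" entry in the input tensordict;
- EnvBase.reward_spec: provides information about the reward space;
- EnvBase.done_spec: provides information about the space of the done flag.

TorchRL specs are organized in two general containers: input_spec, which contains the specs of the information that the step function reads (divided between action_spec, containing the action, and state_spec, containing all the rest), and output_spec, which encodes the specs that the step outputs (observation_spec, reward_spec and done_spec). In general, you should not interact directly with output_spec and input_spec but only with their content: observation_spec, reward_spec, done_spec, action_spec and state_spec. The reason is that the specs are organized in a non-trivial way within output_spec and input_spec, and neither of these should be directly modified.

In other words, observation_spec and related properties are convenient shortcuts to the content of the output and input spec containers.

TorchRL offers multiple TensorSpec subclasses to encode the environment's input and output characteristics.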
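To give an intuition of what a spec encodes, here is a toy stand-in written in plain Python. The class `BoundedSketch` is hypothetical and much simpler than TorchRL's tensor-based specs; it only illustrates the two roles a bounded spec plays, namely describing a domain and sampling from it. The bounds correspond to the torque limit used later in this tutorial.

```python
import random


class BoundedSketch:
    """Toy stand-in for a bounded spec: a closed interval of floats."""

    def __init__(self, low, high):
        self.low = low
        self.high = high

    def is_in(self, value):
        # Membership test: does the value belong to the declared domain?
        return self.low <= value <= self.high

    def rand(self):
        # Draw a random element of the domain.
        return random.uniform(self.low, self.high)


torque_spec = BoundedSketch(-2.0, 2.0)
sample = torque_spec.rand()
print(torque_spec.is_in(sample), torque_spec.is_in(3.0))
```

A consumer of the environment can rely on such a description to generate valid random actions or to validate data without running the simulator.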

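Spec shape

The leading dimensions of the environment specs must match the environment batch size. This is done to enforce that every component of an environment (including its transforms) has an accurate representation of the expected input and output shapes. This is something that should be accurately coded in stateful settings.

For non batch-locked environments, such as the one in our example (see below), this is irrelevant as the environment batch size will most likely be empty.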

def _make_spec(self, td_params):
    # Under the hood, this will populate self.output_spec["observation"]
    self.observation_spec = Composite(
        th=Bounded(
            low=-torch.pi,
            high=torch.pi,
            shape=(),
            dtype=torch.float32,
        ),
        thdot=Bounded(
            low=-td_params["params", "max_speed"],
            high=td_params["params", "max_speed"],
            shape=(),
            dtype=torch.float32,
        ),
        # we need to add the ``params`` to the observation specs, as we want
        # to pass it at each step during a rollout
        params=make_composite_from_td(td_params["params"]),
        shape=(),
    )
    # since the environment is stateless, we expect the previous output as input.
    # For this, ``EnvBase`` expects some state_spec to be available
    self.state_spec = self.observation_spec.clone()
    # action-spec will be automatically wrapped in input_spec when
    # ``self.action_spec = spec`` is called
    self.action_spec = Bounded(
        low=-td_params["params", "max_torque"],
        high=td_params["params", "max_torque"],
        shape=(1,),
        dtype=torch.float32,
    )
    self.reward_spec = Unbounded(shape=(*td_params.shape, 1))


def make_composite_from_td(td):
    # custom function to convert a ``tensordict`` into a similar spec structure
    # of unbounded values.
    composite = Composite(
        {
            key: make_composite_from_td(tensor)
            if isinstance(tensor, TensorDictBase)
            else Unbounded(dtype=tensor.dtype, device=tensor.device, shape=tensor.shape)
            for key, tensor in td.items()
        },
        shape=td.shape,
    )
    return composite

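Reproducible experiments: seeding

Seeding an environment is a common operation when initializing an experiment. The only goal of EnvBase._set_seed() is to set the seed of the contained simulator. If possible, this operation should not call reset() or interact with the environment execution. The parent EnvBase.set_seed() method incorporates a mechanism that allows seeding multiple environments with different pseudo-random and reproducible seeds.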

def _set_seed(self, seed: Optional[int]):
    rng = torch.manual_seed(seed)
    self.rng = rng
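Why a seeded generator makes resets reproducible can be illustrated with the standard library alone. In this sketch, `random.Random` stands in for the PyTorch generator stored in self.rng, and the function `sample_initial_angle` is a hypothetical analogue of the uniform draw performed in _reset(); neither is part of TorchRL.

```python
import math
import random


def sample_initial_angle(rng, high=math.pi):
    # Uniform draw in [-high, high], analogous to the random initial angle.
    return rng.uniform(-high, high)


first = sample_initial_angle(random.Random(0))
second = sample_initial_angle(random.Random(0))
other = sample_initial_angle(random.Random(1))
print(first == second, first == other)
```

Two generators built from the same seed produce the same initial state, while a different seed produces a different one.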

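Wrapping things together: the EnvBase class

We can finally put together the pieces and design our environment class. The specs initialization needs to be performed during the environment construction, so we must take care of calling the _make_spec() method within PendulumEnv.__init__().

We add a static method PendulumEnv.gen_params() which deterministically generates a set of hyperparameters to be used during execution: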

def gen_params(g=10.0, batch_size=None) -> TensorDictBase:
    """Returns a ``tensordict`` containing the physical parameters such as gravitational force and torque or speed limits."""
    if batch_size is None:
        batch_size = []
    td = TensorDict(
        {
            "params": TensorDict(
                {
                    "max_speed": 8,
                    "max_torque": 2.0,
                    "dt": 0.05,
                    "g": g,
                    "m": 1.0,
                    "l": 1.0,
                },
                [],
            )
        },
        [],
    )
    if batch_size:
        td = td.expand(batch_size).contiguous()
    return td

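We define the environment as non-batch_locked by setting the homonymous attribute to False. This means that we will **not** enforce the input tensordict to have a batch-size that matches the one of the environment.

The following code just puts together the pieces we have coded above.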

class PendulumEnv(EnvBase):
    metadata = {
        "render_modes": ["human", "rgb_array"],
        "render_fps": 30,
    }
    batch_locked = False

    def __init__(self, td_params=None, seed=None, device="cpu"):
        if td_params is None:
            td_params = self.gen_params()

        super().__init__(device=device, batch_size=[])
        self._make_spec(td_params)
        if seed is None:
            seed = torch.empty((), dtype=torch.int64).random_().item()
        self.set_seed(seed)

    # Helpers: _make_spec and gen_params
    gen_params = staticmethod(gen_params)
    _make_spec = _make_spec

    # Mandatory methods: _step, _reset and _set_seed
    _reset = _reset
    _step = staticmethod(_step)
    _set_seed = _set_seed

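Testing our environment

TorchRL provides a simple function check_env_specs() to check that a (transformed) environment has an input/output structure that matches the one dictated by its specs. Let us try it out: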

env = PendulumEnv()

check_env_specs(env)

We can have a look at our specs to get a visual representation of the environment signature:

print("observation_spec:", env.observation_spec)

print("state_spec:", env.state_spec)

print("reward_spec:", env.reward_spec)

observation_spec: Composite(

th: BoundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

thdot: BoundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

params: Composite(

max_speed: UnboundedDiscrete(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.int64, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.int64, contiguous=True)),

device=cpu,

dtype=torch.int64,

domain=discrete),

max_torque: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

dt: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

g: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

m: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

l: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

device=cpu,

shape=torch.Size([])),

device=cpu,

shape=torch.Size([]))

state_spec: Composite(

th: BoundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

thdot: BoundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

params: Composite(

max_speed: UnboundedDiscrete(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.int64, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.int64, contiguous=True)),

device=cpu,

dtype=torch.int64,

domain=discrete),

max_torque: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

dt: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

g: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

m: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

l: UnboundedContinuous(

shape=torch.Size([]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous),

device=cpu,

shape=torch.Size([])),

device=cpu,

shape=torch.Size([]))

reward_spec: UnboundedContinuous(

shape=torch.Size([1]),

space=ContinuousBox(

low=Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, contiguous=True),

high=Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, contiguous=True)),

device=cpu,

dtype=torch.float32,

domain=continuous)

We can also run a couple of commands to check that the output structure matches what is expected.

td = env.reset()

print("reset tensordict", td)

reset tensordict TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

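We can run env.rand_step() to generate an action randomly from the action_spec domain. A tensordict containing the hyperparameters and the current state **must** be passed since our environment is stateless. In stateful contexts, env.rand_step() works perfectly too.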

td = env.rand_step(td)

print("random step tensordict", td)

random step tensordict TensorDict(

fields={

action: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

next: TensorDict(

fields={

done: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False),

reward: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False),

terminated: Tensor(shape=torch.Size([1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([]),

device=None,

is_shared=False)

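Transforming an environment

Writing environment transforms for stateless simulators is slightly more complicated than for stateful ones: transforming an output entry that needs to be read at the following iteration requires applying the inverse transform before calling step() at the next step. This is an ideal scenario to showcase all the features of TorchRL's transforms!

For instance, in the following transformed environment we unsqueeze the entries ["th", "thdot"] to be able to stack them along the last dimension. We also pass them as in_keys_inv to squeeze them back to their original shape once they are passed as input in the next iteration.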

env = TransformedEnv(
    env,
    # ``Unsqueeze`` the observations that we will concatenate
    UnsqueezeTransform(
        dim=-1,
        in_keys=["th", "thdot"],
        in_keys_inv=["th", "thdot"],
    ),
)

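Writing custom transforms

TorchRL's transforms may not cover all the operations one wants to execute after an environment has been executed. Writing a transform does not require much effort. As for the environment design, there are two steps in writing a transform:

- Getting the dynamics right (forward and inverse);
- Adapting the environment specs.

A transform can be used in two settings: on its own, it can be used as a Module. It can also be appended to a TransformedEnv. The structure of the class allows the behavior to be customized in each of these contexts.

A Transform skeleton can be summarized as follows: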

class Transform(nn.Module):
    def forward(self, tensordict):
        ...

    def _apply_transform(self, tensordict):
        ...

    def _step(self, tensordict):
        ...

    def _call(self, tensordict):
        ...

    def inv(self, tensordict):
        ...

    def _inv_apply_transform(self, tensordict):
        ...

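There are three entry points (forward(), _step() and inv()), which all receive tensordict.TensorDict instances. The first two will eventually go through the keys indicated by in_keys and call _apply_transform() on each of these. The results will be written in the entries pointed to by Transform.out_keys if provided (if not, the in_keys will be updated with the transformed values). If inverse transforms need to be executed, a similar data flow will be executed but with the Transform.inv() and Transform._inv_apply_transform() methods and across the in_keys_inv and out_keys_inv lists of keys. The following figure summarizes this flow for environments and replay buffers.

Figure: Transform API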
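The forward data flow described above can be sketched with plain dicts. The classes `MiniTransform` and `MiniSin` are hypothetical simplifications, not TorchRL's Transform; they only reproduce the rule that each in_key is passed through _apply_transform() and written to the matching out_key, or back to the in_key when no out_keys are given.

```python
import math


class MiniTransform:
    """Toy key-wise transform over plain dicts (not TorchRL's Transform)."""

    def __init__(self, in_keys, out_keys=None):
        self.in_keys = in_keys
        # Without out_keys, the transformed values overwrite the inputs.
        self.out_keys = out_keys if out_keys is not None else in_keys

    def _apply_transform(self, value):
        raise NotImplementedError

    def forward(self, data):
        for in_key, out_key in zip(self.in_keys, self.out_keys):
            data[out_key] = self._apply_transform(data[in_key])
        return data


class MiniSin(MiniTransform):
    def _apply_transform(self, value):
        return math.sin(value)


kept = MiniSin(in_keys=["th"], out_keys=["sin"]).forward({"th": 0.0})
overwritten = MiniSin(in_keys=["th"]).forward({"th": math.pi / 2})
print(kept, overwritten)
```

In the first call the original "th" entry is preserved next to the new "sin" entry, while in the second call "th" itself is replaced by its sine.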

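In some cases, a transform will not work on a subset of keys in a unitary manner, but will execute some operation on the parent environment or work with the entire input tensordict. In those cases, the _call() and forward() methods should be re-written, and the _apply_transform() method can be skipped.

Let us code new transforms that will compute the sine and cosine values of the position angle, as these values are more useful to us to learn a policy than the raw angle value: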

class SinTransform(Transform):
    def _apply_transform(self, obs: torch.Tensor) -> torch.Tensor:
        return obs.sin()

    # The transform must also modify the data at reset time
    def _reset(
        self, tensordict: TensorDictBase, tensordict_reset: TensorDictBase
    ) -> TensorDictBase:
        return self._call(tensordict_reset)

    # _apply_to_composite will execute the observation spec transform across all
    # in_keys/out_keys pairs and write the result in the observation_spec which
    # is of type ``Composite``
    @_apply_to_composite
    def transform_observation_spec(self, observation_spec):
        return Bounded(
            low=-1,
            high=1,
            shape=observation_spec.shape,
            dtype=observation_spec.dtype,
            device=observation_spec.device,
        )


class CosTransform(Transform):
    def _apply_transform(self, obs: torch.Tensor) -> torch.Tensor:
        return obs.cos()

    # The transform must also modify the data at reset time
    def _reset(
        self, tensordict: TensorDictBase, tensordict_reset: TensorDictBase
    ) -> TensorDictBase:
        return self._call(tensordict_reset)

    # _apply_to_composite will execute the observation spec transform across all
    # in_keys/out_keys pairs and write the result in the observation_spec which
    # is of type ``Composite``
    @_apply_to_composite
    def transform_observation_spec(self, observation_spec):
        return Bounded(
            low=-1,
            high=1,
            shape=observation_spec.shape,
            dtype=observation_spec.dtype,
            device=observation_spec.device,
        )


t_sin = SinTransform(in_keys=["th"], out_keys=["sin"])
t_cos = CosTransform(in_keys=["th"], out_keys=["cos"])
env.append_transform(t_sin)
env.append_transform(t_cos)

TransformedEnv(

env=PendulumEnv(),

transform=Compose(

UnsqueezeTransform(dim=-1, in_keys=['th', 'thdot'], out_keys=['th', 'thdot'], in_keys_inv=['th', 'thdot'], out_keys_inv=['th', 'thdot']),

SinTransform(keys=['th']),

CosTransform(keys=['th'])))

We then concatenate the observations onto an "observation" entry. del_keys=False ensures that we keep these values for the next iteration.

cat_transform = CatTensors(
    in_keys=["sin", "cos", "thdot"], dim=-1, out_key="observation", del_keys=False
)
env.append_transform(cat_transform)

TransformedEnv(

env=PendulumEnv(),

transform=Compose(

UnsqueezeTransform(dim=-1, in_keys=['th', 'thdot'], out_keys=['th', 'thdot'], in_keys_inv=['th', 'thdot'], out_keys_inv=['th', 'thdot']),

SinTransform(keys=['th']),

CosTransform(keys=['th']),

CatTensors(in_keys=['cos', 'sin', 'thdot'], out_key=observation)))

Once more, let us check that our environment specs match what is received:

check_env_specs(env)

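Executing a rollout

Executing a rollout is a succession of simple steps:

- reset the environment
- while some condition is not met:
  - compute an action given a policy
  - execute a step given this action
  - collect the data
  - make an MDP step
- gather the data and return

These operations have been conveniently wrapped in the rollout() method, of which we provide a simplified version below.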

def simple_rollout(steps=100):
    # preallocate:
    data = TensorDict({}, [steps])
    # reset
    _data = env.reset()
    for i in range(steps):
        _data["action"] = env.action_spec.rand()
        _data = env.step(_data)
        data[i] = _data
        _data = step_mdp(_data, keep_other=True)
    return data


print("data from rollout:", simple_rollout(100))

data from rollout: TensorDict(

fields={

action: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

cos: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.bool, is_shared=False),

next: TensorDict(

fields={

cos: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([100, 3]), device=cpu, dtype=torch.float32, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([100]),

device=None,

is_shared=False),

reward: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

sin: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([100]),

device=None,

is_shared=False),

observation: Tensor(shape=torch.Size([100, 3]), device=cpu, dtype=torch.float32, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([100]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([100]),

device=None,

is_shared=False),

sin: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([100, 1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([100]),

device=None,

is_shared=False)

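Batching computations

The last unexplored end of our tutorial is the ability that we have to batch computations in TorchRL. Because our environment does not make any assumptions regarding the input data shape, we can seamlessly execute it over batches of data. Even better: for non-batch-locked environments such as our Pendulum, we can change the batch size on the fly without recreating the environment. To do this, we just generate parameters with the desired shape.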

batch_size = 10 # number of environments to be executed in batch

td = env.reset(env.gen_params(batch_size=[batch_size]))

print("reset (batch size of 10)", td)

td = env.rand_step(td)

print("rand step (batch size of 10)", td)

reset (batch size of 10) TensorDict(

fields={

cos: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10]),

device=None,

is_shared=False),

sin: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10]),

device=None,

is_shared=False)

rand step (batch size of 10) TensorDict(

fields={

action: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

cos: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.bool, is_shared=False),

next: TensorDict(

fields={

cos: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10]),

device=None,

is_shared=False),

reward: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

sin: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10]),

device=None,

is_shared=False),

observation: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([10]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10]),

device=None,

is_shared=False),

sin: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([10, 1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10]),

device=None,

is_shared=False)

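Executing a rollout with a batch of data requires us to reset the environment outside of the rollout function, since we need to define the batch_size dynamically and this is not supported by rollout().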

rollout = env.rollout(

3,

auto_reset=False, # we're executing the reset out of the ``rollout`` call

tensordict=env.reset(env.gen_params(batch_size=[batch_size])),

)

print("rollout of len 3 (batch size of 10):", rollout)

rollout of len 3 (batch size of 10): TensorDict(

fields={

action: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

cos: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.bool, is_shared=False),

next: TensorDict(

fields={

cos: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

done: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.bool, is_shared=False),

observation: Tensor(shape=torch.Size([10, 3, 3]), device=cpu, dtype=torch.float32, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10, 3]),

device=None,

is_shared=False),

reward: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

sin: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10, 3]),

device=None,

is_shared=False),

observation: Tensor(shape=torch.Size([10, 3, 3]), device=cpu, dtype=torch.float32, is_shared=False),

params: TensorDict(

fields={

dt: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

g: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

l: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

m: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False),

max_speed: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.int64, is_shared=False),

max_torque: Tensor(shape=torch.Size([10, 3]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10, 3]),

device=None,

is_shared=False),

sin: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

terminated: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.bool, is_shared=False),

th: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False),

thdot: Tensor(shape=torch.Size([10, 3, 1]), device=cpu, dtype=torch.float32, is_shared=False)},

batch_size=torch.Size([10, 3]),

device=None,

is_shared=False)
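The shapes printed above follow a simple rule: the time dimension is inserted right after the batch dimensions, so a batch of 10 rolled out for 3 steps gives entries of shape `[10, 3, ...]`. The snippet below is only a rough illustration of that stacking with plain `torch` tensors; `per_step_rewards` is a made-up stand-in, not something TorchRL exposes.

```python
import torch

batch_size, num_steps = 10, 3

# Hypothetical per-step outputs: one [batch_size, 1] reward tensor per step.
per_step_rewards = [torch.zeros(batch_size, 1) for _ in range(num_steps)]

# Stack along dim=1, i.e. right after the single batch dimension.
rollout_rewards = torch.stack(per_step_rewards, dim=1)
print(rollout_rewards.shape)
```

The same reasoning explains why `params`, whose leaves have shape `[10]` before the rollout, show up with shape `[10, 3]` afterwards.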

Training a simple policy

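In this example, we will train a simple policy using the reward as a differentiable objective, i.e. its negative serves as the loss. We take advantage of the fact that our dynamic system is fully differentiable to backpropagate through the trajectory return and adjust the weights of our policy to maximize this value directly. Of course, in many settings the assumptions we make here do not hold, such as a differentiable system and full access to the underlying mechanics.

Still, this is a very simple example that shows how a training loop can be written with a custom environment in TorchRL.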
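The idea of backpropagating through a trajectory can be sketched without TorchRL at all. The snippet below is a minimal illustration, not the tutorial's environment: the dynamics and constants are assumed to follow the Gymnasium Pendulum-v1 defaults, and the linear `policy` and the helper `step` are hypothetical stand-ins.

```python
import math

import torch

torch.manual_seed(0)

# Assumed constants, borrowed from the Gymnasium Pendulum-v1 defaults.
G, M, L, DT = 10.0, 1.0, 1.0, 0.05
MAX_TORQUE, MAX_SPEED = 2.0, 8.0


def step(th, thdot, u):
    """One differentiable pendulum step; returns the new state and the reward."""
    u = u.clamp(-MAX_TORQUE, MAX_TORQUE)
    # Wrap the angle to [-pi, pi) so the cost is smallest at the upright position.
    th_wrapped = (th + math.pi) % (2 * math.pi) - math.pi
    reward = -(th_wrapped**2 + 0.1 * thdot**2 + 0.001 * u**2)
    new_thdot = thdot + (3 * G / (2 * L) * th.sin() + 3.0 / (M * L**2) * u) * DT
    new_thdot = new_thdot.clamp(-MAX_SPEED, MAX_SPEED)
    new_th = th + new_thdot * DT
    return new_th, new_thdot, reward


# Hypothetical stand-in policy: a linear map from (cos, sin, thdot) to a torque.
policy = torch.nn.Linear(3, 1)

th = torch.rand(8, 1) * 2 * math.pi - math.pi  # batch of 8 random start angles
thdot = torch.rand(8, 1) * 2 - 1
rewards = []
for _ in range(20):
    obs = torch.cat([th.cos(), th.sin(), thdot], dim=-1)
    th, thdot, reward = step(th, thdot, policy(obs))
    rewards.append(reward)

# Average the reward over batch and time; its negative is the loss.
traj_return = torch.stack(rewards, dim=1).mean()
(-traj_return).backward()
print(policy.weight.grad)
```

Because every operation in `step` is a differentiable tensor operation, the gradient of the return reaches the policy weights through all 20 simulated steps.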

Let us first write the policy network:

torch.manual_seed(0)

env.set_seed(0)

net = nn.Sequential(

nn.LazyLinear(64),

nn.Tanh(),

nn.LazyLinear(64),

nn.Tanh(),

nn.LazyLinear(64),

nn.Tanh(),

nn.LazyLinear(1),

)

policy = TensorDictModule(

net,

in_keys=["observation"],

out_keys=["action"],

)

and our optimizer:

optim = torch.optim.Adam(policy.parameters(), lr=2e-3)
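The training loop further down pairs this optimizer with `CosineAnnealingLR(optim, 20_000)` while performing only `20_000 // batch_size` updates. The sketch below estimates how far the learning rate decays over that many scheduler steps. It assumes the standard closed form of cosine annealing with a minimum learning rate of 0; `cosine_annealed_lr` is a hypothetical helper, not a PyTorch function.

```python
import math


def cosine_annealed_lr(step, base_lr=2e-3, t_max=20_000, eta_min=0.0):
    """Closed-form cosine annealing: base_lr at step 0, eta_min at step t_max."""
    return eta_min + (base_lr - eta_min) * (1 + math.cos(math.pi * step / t_max)) / 2


num_updates = 20_000 // 32  # number of scheduler steps with batch_size = 32
lr_start = cosine_annealed_lr(0)
lr_end = cosine_annealed_lr(num_updates)
print(f"lr at start: {lr_start:.6f}, lr after {num_updates} updates: {lr_end:.6f}")
```

Under these assumptions the learning rate stays very close to its initial value for the whole run, since the schedule only covers a small fraction of its period.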

Training loop

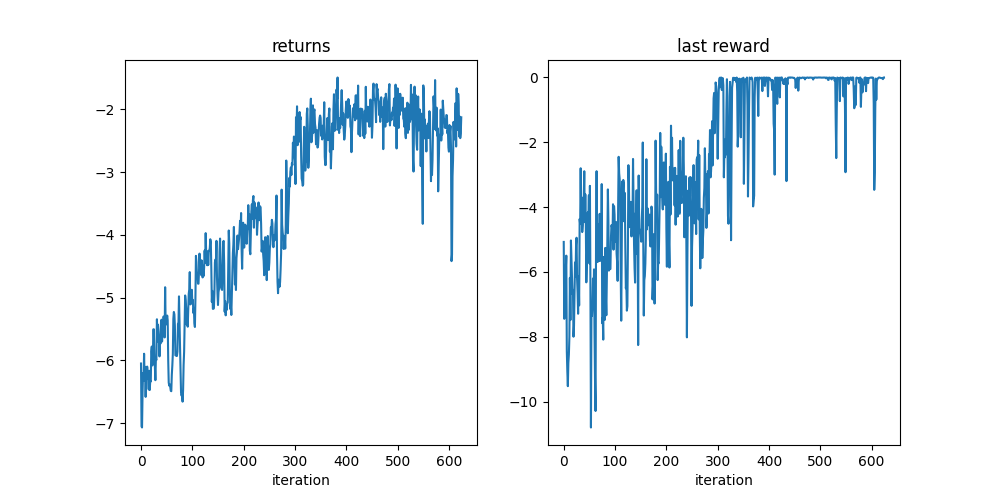

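We will successively:

generate a trajectory;

aggregate the rewards into a return (the loop below takes their mean);

backpropagate through the graph defined by these operations;

clip the gradient norm and make an optimization step;

repeat.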

At the end of the training loop, we should have a final reward close to 0, which indicates that the pendulum is upright and still, as desired.
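The gradient-clipping step relies on `torch.nn.utils.clip_grad_norm_`, which returns the total gradient norm measured before clipping. This is why a logged gradient norm can be much larger than the clipping threshold of 1.0. A minimal check on a toy parameter (`w` is hypothetical and unrelated to the policy):

```python
import torch

# Hypothetical toy parameter with a hand-set gradient of norm 5 (3-4-5 triangle).
w = torch.nn.Parameter(torch.zeros(2))
w.grad = torch.tensor([3.0, 4.0])

# clip_grad_norm_ rescales the gradient in place and returns the pre-clipping norm.
norm_before = torch.nn.utils.clip_grad_norm_([w], max_norm=1.0)
norm_after = w.grad.norm()

print(f"before: {norm_before.item():.4f}, after: {norm_after.item():.4f}")
```

The optimizer therefore always sees a gradient whose norm is at most the threshold, even when the reported value is large.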

batch_size = 32
pbar = tqdm.tqdm(range(20_000 // batch_size))
scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optim, 20_000)
logs = defaultdict(list)

for _ in pbar:
    # Sample a fresh batch of parameters and reset outside of ``rollout``
    init_td = env.reset(env.gen_params(batch_size=[batch_size]))
    rollout = env.rollout(100, policy, tensordict=init_td, auto_reset=False)
    # Mean reward over batch and time; its negative is the loss
    traj_return = rollout["next", "reward"].mean()
    (-traj_return).backward()
    # ``gn`` is the gradient norm before clipping
    gn = torch.nn.utils.clip_grad_norm_(net.parameters(), 1.0)
    optim.step()
    optim.zero_grad()
    pbar.set_description(
        f"reward: {traj_return: 4.4f}, "
        f"last reward: {rollout[..., -1]['next', 'reward'].mean(): 4.4f}, gradient norm: {gn: 4.4}"
    )
    logs["return"].append(traj_return.item())
    logs["last_reward"].append(rollout[..., -1]["next", "reward"].mean().item())
    scheduler.step()


def plot():
    import matplotlib
    from matplotlib import pyplot as plt

    is_ipython = "inline" in matplotlib.get_backend()
    if is_ipython:
        from IPython import display

    with plt.ion():
        plt.figure(figsize=(10, 5))
        # Left panel: mean return per iteration
        plt.subplot(1, 2, 1)
        plt.plot(logs["return"])
        plt.title("returns")
        plt.xlabel("iteration")
        # Right panel: mean reward at the last step of each rollout
        plt.subplot(1, 2, 2)
        plt.plot(logs["last_reward"])
        plt.title("last reward")
        plt.xlabel("iteration")
        if is_ipython:
            display.display(plt.gcf())
            display.clear_output(wait=True)
        plt.show()


plot()

0%| | 0/625 [00:00<?, ?it/s]

reward: -6.0488, last reward: -5.0748, gradient norm: 8.519: 0%| | 0/625 [00:00<?, ?it/s]

reward: -6.0488, last reward: -5.0748, gradient norm: 8.519: 0%| | 1/625 [00:00<01:33, 6.69it/s]

reward: -7.0499, last reward: -7.4472, gradient norm: 5.073: 0%| | 1/625 [00:00<01:33, 6.69it/s]

reward: -7.0499, last reward: -7.4472, gradient norm: 5.073: 0%| | 2/625 [00:00<01:33, 6.63it/s]

reward: -7.0685, last reward: -7.0408, gradient norm: 5.552: 0%| | 2/625 [00:00<01:33, 6.63it/s]

reward: -7.0685, last reward: -7.0408, gradient norm: 5.552: 0%| | 3/625 [00:00<01:33, 6.63it/s]

reward: -6.5154, last reward: -5.9086, gradient norm: 2.527: 0%| | 3/625 [00:00<01:33, 6.63it/s]

reward: -6.5154, last reward: -5.9086, gradient norm: 2.527: 1%| | 4/625 [00:00<01:34, 6.60it/s]

reward: -6.2006, last reward: -5.9385, gradient norm: 8.155: 1%| | 4/625 [00:00<01:34, 6.60it/s]

reward: -6.2006, last reward: -5.9385, gradient norm: 8.155: 1%| | 5/625 [00:00<01:33, 6.60it/s]

reward: -6.2568, last reward: -5.4981, gradient norm: 6.223: 1%| | 5/625 [00:00<01:33, 6.60it/s]

reward: -6.2568, last reward: -5.4981, gradient norm: 6.223: 1%| | 6/625 [00:00<01:33, 6.62it/s]

reward: -5.8929, last reward: -8.4491, gradient norm: 4.581: 1%| | 6/625 [00:01<01:33, 6.62it/s]

reward: -5.8929, last reward: -8.4491, gradient norm: 4.581: 1%| | 7/625 [00:01<01:33, 6.63it/s]

reward: -6.3233, last reward: -9.0664, gradient norm: 7.596: 1%| | 7/625 [00:01<01:33, 6.63it/s]

reward: -6.3233, last reward: -9.0664, gradient norm: 7.596: 1%|▏ | 8/625 [00:01<01:34, 6.56it/s]

reward: -6.1021, last reward: -9.5263, gradient norm: 0.9579: 1%|▏ | 8/625 [00:01<01:34, 6.56it/s]

reward: -6.1021, last reward: -9.5263, gradient norm: 0.9579: 1%|▏ | 9/625 [00:01<01:34, 6.53it/s]

reward: -6.5807, last reward: -8.8075, gradient norm: 3.212: 1%|▏ | 9/625 [00:01<01:34, 6.53it/s]

reward: -6.5807, last reward: -8.8075, gradient norm: 3.212: 2%|▏ | 10/625 [00:01<01:33, 6.56it/s]

reward: -6.2009, last reward: -8.5525, gradient norm: 2.914: 2%|▏ | 10/625 [00:01<01:33, 6.56it/s]

reward: -6.2009, last reward: -8.5525, gradient norm: 2.914: 2%|▏ | 11/625 [00:01<01:33, 6.58it/s]

reward: -6.2894, last reward: -8.0115, gradient norm: 52.06: 2%|▏ | 11/625 [00:01<01:33, 6.58it/s]

reward: -6.2894, last reward: -8.0115, gradient norm: 52.06: 2%|▏ | 12/625 [00:01<01:32, 6.59it/s]

reward: -6.0977, last reward: -6.1845, gradient norm: 18.09: 2%|▏ | 12/625 [00:01<01:32, 6.59it/s]

reward: -6.0977, last reward: -6.1845, gradient norm: 18.09: 2%|▏ | 13/625 [00:01<01:32, 6.60it/s]

reward: -6.1830, last reward: -7.4858, gradient norm: 5.233: 2%|▏ | 13/625 [00:02<01:32, 6.60it/s]

reward: -6.1830, last reward: -7.4858, gradient norm: 5.233: 2%|▏ | 14/625 [00:02<01:32, 6.61it/s]

reward: -6.2863, last reward: -5.0297, gradient norm: 1.464: 2%|▏ | 14/625 [00:02<01:32, 6.61it/s]

reward: -6.2863, last reward: -5.0297, gradient norm: 1.464: 2%|▏ | 15/625 [00:02<01:31, 6.63it/s]

reward: -6.4617, last reward: -5.5997, gradient norm: 2.904: 2%|▏ | 15/625 [00:02<01:31, 6.63it/s]

reward: -6.4617, last reward: -5.5997, gradient norm: 2.904: 3%|▎ | 16/625 [00:02<01:31, 6.63it/s]

reward: -6.1647, last reward: -6.0777, gradient norm: 4.901: 3%|▎ | 16/625 [00:02<01:31, 6.63it/s]

reward: -6.1647, last reward: -6.0777, gradient norm: 4.901: 3%|▎ | 17/625 [00:02<01:31, 6.64it/s]

reward: -6.4709, last reward: -6.6813, gradient norm: 0.8317: 3%|▎ | 17/625 [00:02<01:31, 6.64it/s]

reward: -6.4709, last reward: -6.6813, gradient norm: 0.8317: 3%|▎ | 18/625 [00:02<01:31, 6.64it/s]

reward: -6.3221, last reward: -6.5554, gradient norm: 1.276: 3%|▎ | 18/625 [00:02<01:31, 6.64it/s]

reward: -6.3221, last reward: -6.5554, gradient norm: 1.276: 3%|▎ | 19/625 [00:02<01:31, 6.64it/s]

reward: -6.3353, last reward: -7.9999, gradient norm: 4.701: 3%|▎ | 19/625 [00:03<01:31, 6.64it/s]

reward: -6.3353, last reward: -7.9999, gradient norm: 4.701: 3%|▎ | 20/625 [00:03<01:31, 6.65it/s]

reward: -5.8570, last reward: -7.6656, gradient norm: 5.463: 3%|▎ | 20/625 [00:03<01:31, 6.65it/s]

reward: -5.8570, last reward: -7.6656, gradient norm: 5.463: 3%|▎ | 21/625 [00:03<01:30, 6.65it/s]

reward: -5.7779, last reward: -6.6911, gradient norm: 6.875: 3%|▎ | 21/625 [00:03<01:30, 6.65it/s]

reward: -5.7779, last reward: -6.6911, gradient norm: 6.875: 4%|▎ | 22/625 [00:03<01:30, 6.66it/s]

reward: -6.0796, last reward: -5.7082, gradient norm: 5.308: 4%|▎ | 22/625 [00:03<01:30, 6.66it/s]

reward: -6.0796, last reward: -5.7082, gradient norm: 5.308: 4%|▎ | 23/625 [00:03<01:30, 6.65it/s]

reward: -6.0421, last reward: -6.1496, gradient norm: 12.4: 4%|▎ | 23/625 [00:03<01:30, 6.65it/s]

reward: -6.0421, last reward: -6.1496, gradient norm: 12.4: 4%|▍ | 24/625 [00:03<01:30, 6.65it/s]

reward: -5.5037, last reward: -5.1755, gradient norm: 22.62: 4%|▍ | 24/625 [00:03<01:30, 6.65it/s]

reward: -5.5037, last reward: -5.1755, gradient norm: 22.62: 4%|▍ | 25/625 [00:03<01:30, 6.65it/s]

reward: -5.5029, last reward: -4.9454, gradient norm: 3.665: 4%|▍ | 25/625 [00:03<01:30, 6.65it/s]

reward: -5.5029, last reward: -4.9454, gradient norm: 3.665: 4%|▍ | 26/625 [00:03<01:30, 6.65it/s]

reward: -5.9330, last reward: -6.2118, gradient norm: 5.444: 4%|▍ | 26/625 [00:04<01:30, 6.65it/s]

reward: -5.9330, last reward: -6.2118, gradient norm: 5.444: 4%|▍ | 27/625 [00:04<01:29, 6.65it/s]

reward: -6.0995, last reward: -6.6294, gradient norm: 11.69: 4%|▍ | 27/625 [00:04<01:29, 6.65it/s]

reward: -6.0995, last reward: -6.6294, gradient norm: 11.69: 4%|▍ | 28/625 [00:04<01:29, 6.65it/s]

reward: -6.3146, last reward: -7.2909, gradient norm: 5.461: 4%|▍ | 28/625 [00:04<01:29, 6.65it/s]

reward: -6.3146, last reward: -7.2909, gradient norm: 5.461: 5%|▍ | 29/625 [00:04<01:29, 6.66it/s]

reward: -5.9720, last reward: -6.1298, gradient norm: 19.91: 5%|▍ | 29/625 [00:04<01:29, 6.66it/s]

reward: -5.9720, last reward: -6.1298, gradient norm: 19.91: 5%|▍ | 30/625 [00:04<01:29, 6.66it/s]

reward: -5.9923, last reward: -7.0345, gradient norm: 3.464: 5%|▍ | 30/625 [00:04<01:29, 6.66it/s]

reward: -5.9923, last reward: -7.0345, gradient norm: 3.464: 5%|▍ | 31/625 [00:04<01:29, 6.65it/s]

reward: -5.3438, last reward: -4.3688, gradient norm: 2.424: 5%|▍ | 31/625 [00:04<01:29, 6.65it/s]

reward: -5.3438, last reward: -4.3688, gradient norm: 2.424: 5%|▌ | 32/625 [00:04<01:29, 6.64it/s]

reward: -5.6953, last reward: -4.5233, gradient norm: 3.411: 5%|▌ | 32/625 [00:04<01:29, 6.64it/s]

reward: -5.6953, last reward: -4.5233, gradient norm: 3.411: 5%|▌ | 33/625 [00:04<01:29, 6.64it/s]

reward: -5.4288, last reward: -2.8011, gradient norm: 10.82: 5%|▌ | 33/625 [00:05<01:29, 6.64it/s]

reward: -5.4288, last reward: -2.8011, gradient norm: 10.82: 5%|▌ | 34/625 [00:05<01:29, 6.64it/s]

reward: -5.5329, last reward: -4.2677, gradient norm: 15.71: 5%|▌ | 34/625 [00:05<01:29, 6.64it/s]

reward: -5.5329, last reward: -4.2677, gradient norm: 15.71: 6%|▌ | 35/625 [00:05<01:28, 6.64it/s]

reward: -5.6969, last reward: -3.7010, gradient norm: 1.376: 6%|▌ | 35/625 [00:05<01:28, 6.64it/s]

reward: -5.6969, last reward: -3.7010, gradient norm: 1.376: 6%|▌ | 36/625 [00:05<01:28, 6.65it/s]

reward: -5.9352, last reward: -4.7707, gradient norm: 15.49: 6%|▌ | 36/625 [00:05<01:28, 6.65it/s]

reward: -5.9352, last reward: -4.7707, gradient norm: 15.49: 6%|▌ | 37/625 [00:05<01:28, 6.65it/s]

reward: -5.6178, last reward: -4.5646, gradient norm: 3.348: 6%|▌ | 37/625 [00:05<01:28, 6.65it/s]

reward: -5.6178, last reward: -4.5646, gradient norm: 3.348: 6%|▌ | 38/625 [00:05<01:28, 6.65it/s]

reward: -5.7304, last reward: -3.9407, gradient norm: 4.942: 6%|▌ | 38/625 [00:05<01:28, 6.65it/s]

reward: -5.7304, last reward: -3.9407, gradient norm: 4.942: 6%|▌ | 39/625 [00:05<01:28, 6.64it/s]

reward: -5.3882, last reward: -3.7604, gradient norm: 9.85: 6%|▌ | 39/625 [00:06<01:28, 6.64it/s]

reward: -5.3882, last reward: -3.7604, gradient norm: 9.85: 6%|▋ | 40/625 [00:06<01:28, 6.63it/s]

reward: -5.3507, last reward: -2.8928, gradient norm: 1.258: 6%|▋ | 40/625 [00:06<01:28, 6.63it/s]

reward: -5.3507, last reward: -2.8928, gradient norm: 1.258: 7%|▋ | 41/625 [00:06<01:27, 6.64it/s]

reward: -5.6978, last reward: -4.4641, gradient norm: 4.549: 7%|▋ | 41/625 [00:06<01:27, 6.64it/s]

reward: -5.6978, last reward: -4.4641, gradient norm: 4.549: 7%|▋ | 42/625 [00:06<01:27, 6.64it/s]

reward: -5.5263, last reward: -3.6047, gradient norm: 2.544: 7%|▋ | 42/625 [00:06<01:27, 6.64it/s]

reward: -5.5263, last reward: -3.6047, gradient norm: 2.544: 7%|▋ | 43/625 [00:06<01:27, 6.64it/s]

reward: -5.5005, last reward: -4.4136, gradient norm: 11.49: 7%|▋ | 43/625 [00:06<01:27, 6.64it/s]

reward: -5.5005, last reward: -4.4136, gradient norm: 11.49: 7%|▋ | 44/625 [00:06<01:27, 6.64it/s]

reward: -5.2993, last reward: -6.3222, gradient norm: 32.53: 7%|▋ | 44/625 [00:06<01:27, 6.64it/s]

reward: -5.2993, last reward: -6.3222, gradient norm: 32.53: 7%|▋ | 45/625 [00:06<01:27, 6.65it/s]

reward: -5.4046, last reward: -5.7314, gradient norm: 7.275: 7%|▋ | 45/625 [00:06<01:27, 6.65it/s]

reward: -5.4046, last reward: -5.7314, gradient norm: 7.275: 7%|▋ | 46/625 [00:06<01:27, 6.65it/s]

reward: -5.6331, last reward: -4.9318, gradient norm: 6.961: 7%|▋ | 46/625 [00:07<01:27, 6.65it/s]

reward: -5.6331, last reward: -4.9318, gradient norm: 6.961: 8%|▊ | 47/625 [00:07<01:26, 6.65it/s]

reward: -4.8331, last reward: -4.1604, gradient norm: 26.26: 8%|▊ | 47/625 [00:07<01:26, 6.65it/s]

reward: -4.8331, last reward: -4.1604, gradient norm: 26.26: 8%|▊ | 48/625 [00:07<01:26, 6.66it/s]

reward: -5.4099, last reward: -4.4761, gradient norm: 8.125: 8%|▊ | 48/625 [00:07<01:26, 6.66it/s]

reward: -5.4099, last reward: -4.4761, gradient norm: 8.125: 8%|▊ | 49/625 [00:07<01:26, 6.65it/s]

reward: -5.4262, last reward: -3.6363, gradient norm: 2.382: 8%|▊ | 49/625 [00:07<01:26, 6.65it/s]

reward: -5.4262, last reward: -3.6363, gradient norm: 2.382: 8%|▊ | 50/625 [00:07<01:26, 6.65it/s]

reward: -5.3593, last reward: -5.7377, gradient norm: 22.62: 8%|▊ | 50/625 [00:07<01:26, 6.65it/s]

reward: -5.3593, last reward: -5.7377, gradient norm: 22.62: 8%|▊ | 51/625 [00:07<01:26, 6.64it/s]

reward: -5.2847, last reward: -3.3443, gradient norm: 2.867: 8%|▊ | 51/625 [00:07<01:26, 6.64it/s]

reward: -5.2847, last reward: -3.3443, gradient norm: 2.867: 8%|▊ | 52/625 [00:07<01:26, 6.64it/s]

reward: -5.3592, last reward: -6.4760, gradient norm: 8.441: 8%|▊ | 52/625 [00:07<01:26, 6.64it/s]

reward: -5.3592, last reward: -6.4760, gradient norm: 8.441: 8%|▊ | 53/625 [00:07<01:26, 6.64it/s]

reward: -5.9950, last reward: -10.8021, gradient norm: 11.77: 8%|▊ | 53/625 [00:08<01:26, 6.64it/s]

reward: -5.9950, last reward: -10.8021, gradient norm: 11.77: 9%|▊ | 54/625 [00:08<01:25, 6.65it/s]

reward: -6.3528, last reward: -7.1214, gradient norm: 7.708: 9%|▊ | 54/625 [00:08<01:25, 6.65it/s]

reward: -6.3528, last reward: -7.1214, gradient norm: 7.708: 9%|▉ | 55/625 [00:08<01:25, 6.64it/s]

reward: -6.4023, last reward: -7.3583, gradient norm: 9.041: 9%|▉ | 55/625 [00:08<01:25, 6.64it/s]

reward: -6.4023, last reward: -7.3583, gradient norm: 9.041: 9%|▉ | 56/625 [00:08<01:25, 6.64it/s]

reward: -6.3801, last reward: -7.0310, gradient norm: 120.1: 9%|▉ | 56/625 [00:08<01:25, 6.64it/s]

reward: -6.3801, last reward: -7.0310, gradient norm: 120.1: 9%|▉ | 57/625 [00:08<01:25, 6.64it/s]

reward: -6.4244, last reward: -6.2039, gradient norm: 15.48: 9%|▉ | 57/625 [00:08<01:25, 6.64it/s]

reward: -6.4244, last reward: -6.2039, gradient norm: 15.48: 9%|▉ | 58/625 [00:08<01:25, 6.64it/s]

reward: -6.4850, last reward: -6.8748, gradient norm: 4.706: 9%|▉ | 58/625 [00:08<01:25, 6.64it/s]

reward: -6.4850, last reward: -6.8748, gradient norm: 4.706: 9%|▉ | 59/625 [00:08<01:25, 6.63it/s]

reward: -6.4897, last reward: -5.9210, gradient norm: 11.63: 9%|▉ | 59/625 [00:09<01:25, 6.63it/s]

reward: -6.4897, last reward: -5.9210, gradient norm: 11.63: 10%|▉ | 60/625 [00:09<01:25, 6.64it/s]

reward: -6.2299, last reward: -7.8964, gradient norm: 13.35: 10%|▉ | 60/625 [00:09<01:25, 6.64it/s]

reward: -6.2299, last reward: -7.8964, gradient norm: 13.35: 10%|▉ | 61/625 [00:09<01:24, 6.65it/s]

reward: -6.0832, last reward: -9.3934, gradient norm: 4.456: 10%|▉ | 61/625 [00:09<01:24, 6.65it/s]

reward: -6.0832, last reward: -9.3934, gradient norm: 4.456: 10%|▉ | 62/625 [00:09<01:24, 6.65it/s]

reward: -5.8971, last reward: -10.2933, gradient norm: 10.74: 10%|▉ | 62/625 [00:09<01:24, 6.65it/s]

reward: -5.8971, last reward: -10.2933, gradient norm: 10.74: 10%|█ | 63/625 [00:09<01:24, 6.64it/s]

reward: -5.3377, last reward: -4.6996, gradient norm: 23.29: 10%|█ | 63/625 [00:09<01:24, 6.64it/s]

reward: -5.3377, last reward: -4.6996, gradient norm: 23.29: 10%|█ | 64/625 [00:09<01:24, 6.63it/s]

reward: -5.2274, last reward: -2.8916, gradient norm: 4.098: 10%|█ | 64/625 [00:09<01:24, 6.63it/s]

reward: -5.2274, last reward: -2.8916, gradient norm: 4.098: 10%|█ | 65/625 [00:09<01:24, 6.64it/s]

reward: -5.2660, last reward: -4.9110, gradient norm: 12.28: 10%|█ | 65/625 [00:09<01:24, 6.64it/s]

reward: -5.2660, last reward: -4.9110, gradient norm: 12.28: 11%|█ | 66/625 [00:09<01:24, 6.64it/s]

reward: -5.4503, last reward: -5.6956, gradient norm: 12.22: 11%|█ | 66/625 [00:10<01:24, 6.64it/s]

reward: -5.4503, last reward: -5.6956, gradient norm: 12.22: 11%|█ | 67/625 [00:10<01:23, 6.65it/s]

reward: -5.9172, last reward: -5.4026, gradient norm: 7.946: 11%|█ | 67/625 [00:10<01:23, 6.65it/s]

reward: -5.9172, last reward: -5.4026, gradient norm: 7.946: 11%|█ | 68/625 [00:10<01:23, 6.65it/s]

reward: -5.9229, last reward: -4.5205, gradient norm: 6.294: 11%|█ | 68/625 [00:10<01:23, 6.65it/s]

reward: -5.9229, last reward: -4.5205, gradient norm: 6.294: 11%|█ | 69/625 [00:10<01:23, 6.65it/s]

reward: -5.8872, last reward: -5.6637, gradient norm: 8.019: 11%|█ | 69/625 [00:10<01:23, 6.65it/s]

reward: -5.8872, last reward: -5.6637, gradient norm: 8.019: 11%|█ | 70/625 [00:10<01:23, 6.64it/s]

reward: -5.9281, last reward: -4.2082, gradient norm: 5.724: 11%|█ | 70/625 [00:10<01:23, 6.64it/s]

reward: -5.9281, last reward: -4.2082, gradient norm: 5.724: 11%|█▏ | 71/625 [00:10<01:23, 6.63it/s]

reward: -5.8561, last reward: -5.6574, gradient norm: 8.357: 11%|█▏ | 71/625 [00:10<01:23, 6.63it/s]

reward: -5.8561, last reward: -5.6574, gradient norm: 8.357: 12%|█▏ | 72/625 [00:10<01:23, 6.62it/s]

reward: -5.4138, last reward: -4.5230, gradient norm: 7.385: 12%|█▏ | 72/625 [00:11<01:23, 6.62it/s]

reward: -5.4138, last reward: -4.5230, gradient norm: 7.385: 12%|█▏ | 73/625 [00:11<01:23, 6.61it/s]

reward: -5.4065, last reward: -5.5642, gradient norm: 9.921: 12%|█▏ | 73/625 [00:11<01:23, 6.61it/s]

reward: -5.4065, last reward: -5.5642, gradient norm: 9.921: 12%|█▏ | 74/625 [00:11<01:23, 6.62it/s]

reward: -4.9786, last reward: -3.2894, gradient norm: 32.73: 12%|█▏ | 74/625 [00:11<01:23, 6.62it/s]

reward: -4.9786, last reward: -3.2894, gradient norm: 32.73: 12%|█▏ | 75/625 [00:11<01:23, 6.63it/s]

reward: -5.4129, last reward: -7.5831, gradient norm: 9.266: 12%|█▏ | 75/625 [00:11<01:23, 6.63it/s]

reward: -5.4129, last reward: -7.5831, gradient norm: 9.266: 12%|█▏ | 76/625 [00:11<01:22, 6.62it/s]

reward: -5.7723, last reward: -7.4152, gradient norm: 5.608: 12%|█▏ | 76/625 [00:11<01:22, 6.62it/s]

reward: -5.7723, last reward: -7.4152, gradient norm: 5.608: 12%|█▏ | 77/625 [00:11<01:22, 6.63it/s]

reward: -6.1604, last reward: -8.0898, gradient norm: 4.389: 12%|█▏ | 77/625 [00:11<01:22, 6.63it/s]

reward: -6.1604, last reward: -8.0898, gradient norm: 4.389: 12%|█▏ | 78/625 [00:11<01:22, 6.63it/s]

reward: -6.5155, last reward: -5.5376, gradient norm: 36.34: 12%|█▏ | 78/625 [00:11<01:22, 6.63it/s]

reward: -6.5155, last reward: -5.5376, gradient norm: 36.34: 13%|█▎ | 79/625 [00:11<01:22, 6.61it/s]

reward: -6.5616, last reward: -6.4094, gradient norm: 8.283: 13%|█▎ | 79/625 [00:12<01:22, 6.61it/s]

reward: -6.5616, last reward: -6.4094, gradient norm: 8.283: 13%|█▎ | 80/625 [00:12<01:22, 6.61it/s]

reward: -6.5333, last reward: -7.4803, gradient norm: 5.895: 13%|█▎ | 80/625 [00:12<01:22, 6.61it/s]

reward: -6.5333, last reward: -7.4803, gradient norm: 5.895: 13%|█▎ | 81/625 [00:12<01:22, 6.62it/s]

reward: -6.6566, last reward: -5.2588, gradient norm: 7.662: 13%|█▎ | 81/625 [00:12<01:22, 6.62it/s]

reward: -6.6566, last reward: -5.2588, gradient norm: 7.662: 13%|█▎ | 82/625 [00:12<01:21, 6.62it/s]

reward: -6.4732, last reward: -6.7503, gradient norm: 6.068: 13%|█▎ | 82/625 [00:12<01:21, 6.62it/s]

reward: -6.4732, last reward: -6.7503, gradient norm: 6.068: 13%|█▎ | 83/625 [00:12<01:22, 6.60it/s]

reward: -6.0714, last reward: -7.3370, gradient norm: 8.059: 13%|█▎ | 83/625 [00:12<01:22, 6.60it/s]

reward: -6.0714, last reward: -7.3370, gradient norm: 8.059: 13%|█▎ | 84/625 [00:12<01:21, 6.61it/s]

reward: -5.8612, last reward: -6.1915, gradient norm: 9.3: 13%|█▎ | 84/625 [00:12<01:21, 6.61it/s]

reward: -5.8612, last reward: -6.1915, gradient norm: 9.3: 14%|█▎ | 85/625 [00:12<01:21, 6.61it/s]

reward: -5.3855, last reward: -5.0349, gradient norm: 15.2: 14%|█▎ | 85/625 [00:12<01:21, 6.61it/s]

reward: -5.3855, last reward: -5.0349, gradient norm: 15.2: 14%|█▍ | 86/625 [00:12<01:21, 6.61it/s]

reward: -4.9644, last reward: -3.4538, gradient norm: 3.445: 14%|█▍ | 86/625 [00:13<01:21, 6.61it/s]

reward: -4.9644, last reward: -3.4538, gradient norm: 3.445: 14%|█▍ | 87/625 [00:13<01:21, 6.62it/s]

reward: -5.0392, last reward: -4.4080, gradient norm: 11.45: 14%|█▍ | 87/625 [00:13<01:21, 6.62it/s]

reward: -5.0392, last reward: -4.4080, gradient norm: 11.45: 14%|█▍ | 88/625 [00:13<01:21, 6.62it/s]

reward: -5.1648, last reward: -5.9599, gradient norm: 143.4: 14%|█▍ | 88/625 [00:13<01:21, 6.62it/s]

reward: -5.1648, last reward: -5.9599, gradient norm: 143.4: 14%|█▍ | 89/625 [00:13<01:20, 6.62it/s]

reward: -5.4284, last reward: -5.5946, gradient norm: 10.3: 14%|█▍ | 89/625 [00:13<01:20, 6.62it/s]

reward: -5.4284, last reward: -5.5946, gradient norm: 10.3: 14%|█▍ | 90/625 [00:13<01:20, 6.63it/s]

reward: -5.2590, last reward: -5.9181, gradient norm: 11.15: 14%|█▍ | 90/625 [00:13<01:20, 6.63it/s]

reward: -5.2590, last reward: -5.9181, gradient norm: 11.15: 15%|█▍ | 91/625 [00:13<01:20, 6.63it/s]

reward: -5.4621, last reward: -5.9075, gradient norm: 8.674: 15%|█▍ | 91/625 [00:13<01:20, 6.63it/s]

reward: -5.4621, last reward: -5.9075, gradient norm: 8.674: 15%|█▍ | 92/625 [00:13<01:20, 6.62it/s]

reward: -5.1772, last reward: -4.9444, gradient norm: 8.351: 15%|█▍ | 92/625 [00:14<01:20, 6.62it/s]

reward: -5.1772, last reward: -4.9444, gradient norm: 8.351: 15%|█▍ | 93/625 [00:14<01:20, 6.63it/s]

reward: -4.9391, last reward: -4.5595, gradient norm: 8.1: 15%|█▍ | 93/625 [00:14<01:20, 6.63it/s]

reward: -4.9391, last reward: -4.5595, gradient norm: 8.1: 15%|█▌ | 94/625 [00:14<01:20, 6.61it/s]

reward: -4.8673, last reward: -4.6240, gradient norm: 14.43: 15%|█▌ | 94/625 [00:14<01:20, 6.61it/s]

reward: -4.8673, last reward: -4.6240, gradient norm: 14.43: 15%|█▌ | 95/625 [00:14<01:20, 6.61it/s]

reward: -4.5919, last reward: -5.0018, gradient norm: 26.09: 15%|█▌ | 95/625 [00:14<01:20, 6.61it/s]

reward: -4.5919, last reward: -5.0018, gradient norm: 26.09: 15%|█▌ | 96/625 [00:14<01:20, 6.61it/s]

reward: -5.1071, last reward: -3.9127, gradient norm: 2.251: 15%|█▌ | 96/625 [00:14<01:20, 6.61it/s]

reward: -5.1071, last reward: -3.9127, gradient norm: 2.251: 16%|█▌ | 97/625 [00:14<01:19, 6.62it/s]

reward: -4.9799, last reward: -5.3131, gradient norm: 19.65: 16%|█▌ | 97/625 [00:14<01:19, 6.62it/s]

reward: -4.9799, last reward: -5.3131, gradient norm: 19.65: 16%|█▌ | 98/625 [00:14<01:19, 6.63it/s]

reward: -4.9612, last reward: -3.9705, gradient norm: 12.55: 16%|█▌ | 98/625 [00:14<01:19, 6.63it/s]

reward: -4.9612, last reward: -3.9705, gradient norm: 12.55: 16%|█▌ | 99/625 [00:14<01:19, 6.61it/s]

reward: -4.8741, last reward: -4.2230, gradient norm: 6.19: 16%|█▌ | 99/625 [00:15<01:19, 6.61it/s]

reward: -4.8741, last reward: -4.2230, gradient norm: 6.19: 16%|█▌ | 100/625 [00:15<01:19, 6.62it/s]

reward: -5.0972, last reward: -5.0337, gradient norm: 11.86: 16%|█▌ | 100/625 [00:15<01:19, 6.62it/s]

reward: -5.0972, last reward: -5.0337, gradient norm: 11.86: 16%|█▌ | 101/625 [00:15<01:19, 6.62it/s]

reward: -5.0350, last reward: -5.0654, gradient norm: 10.83: 16%|█▌ | 101/625 [00:15<01:19, 6.62it/s]

reward: -5.0350, last reward: -5.0654, gradient norm: 10.83: 16%|█▋ | 102/625 [00:15<01:18, 6.62it/s]

reward: -5.2441, last reward: -4.4596, gradient norm: 7.362: 16%|█▋ | 102/625 [00:15<01:18, 6.62it/s]

reward: -5.2441, last reward: -4.4596, gradient norm: 7.362: 16%|█▋ | 103/625 [00:15<01:18, 6.63it/s]

reward: -5.1664, last reward: -5.4362, gradient norm: 8.171: 16%|█▋ | 103/625 [00:15<01:18, 6.63it/s]

reward: -5.1664, last reward: -5.4362, gradient norm: 8.171: 17%|█▋ | 104/625 [00:15<01:18, 6.61it/s]

reward: -5.4041, last reward: -5.6907, gradient norm: 7.77: 17%|█▋ | 104/625 [00:15<01:18, 6.61it/s]

reward: -5.4041, last reward: -5.6907, gradient norm: 7.77: 17%|█▋ | 105/625 [00:15<01:18, 6.58it/s]

reward: -5.4664, last reward: -6.2760, gradient norm: 11.19: 17%|█▋ | 105/625 [00:15<01:18, 6.58it/s]

reward: -5.4664, last reward: -6.2760, gradient norm: 11.19: 17%|█▋ | 106/625 [00:15<01:18, 6.57it/s]

reward: -5.0299, last reward: -3.9712, gradient norm: 9.349: 17%|█▋ | 106/625 [00:16<01:18, 6.57it/s]

reward: -5.0299, last reward: -3.9712, gradient norm: 9.349: 17%|█▋ | 107/625 [00:16<01:18, 6.58it/s]

reward: -4.3332, last reward: -2.4479, gradient norm: 5.772: 17%|█▋ | 107/625 [00:16<01:18, 6.58it/s]

reward: -4.3332, last reward: -2.4479, gradient norm: 5.772: 17%|█▋ | 108/625 [00:16<01:18, 6.60it/s]

reward: -4.4357, last reward: -2.9591, gradient norm: 4.543: 17%|█▋ | 108/625 [00:16<01:18, 6.60it/s]

reward: -4.4357, last reward: -2.9591, gradient norm: 4.543: 17%|█▋ | 109/625 [00:16<01:18, 6.58it/s]

reward: -4.6216, last reward: -3.1353, gradient norm: 4.692: 17%|█▋ | 109/625 [00:16<01:18, 6.58it/s]

reward: -4.6216, last reward: -3.1353, gradient norm: 4.692: 18%|█▊ | 110/625 [00:16<01:18, 6.60it/s]

reward: -4.6261, last reward: -3.7086, gradient norm: 4.496: 18%|█▊ | 110/625 [00:16<01:18, 6.60it/s]

reward: -4.6261, last reward: -3.7086, gradient norm: 4.496: 18%|█▊ | 111/625 [00:16<01:17, 6.60it/s]

reward: -4.7758, last reward: -5.9818, gradient norm: 21.71: 18%|█▊ | 111/625 [00:16<01:17, 6.60it/s]

reward: -4.7758, last reward: -5.9818, gradient norm: 21.71: 18%|█▊ | 112/625 [00:16<01:17, 6.59it/s]

reward: -4.7772, last reward: -7.5055, gradient norm: 62.86: 18%|█▊ | 112/625 [00:17<01:17, 6.59it/s]

reward: -4.7772, last reward: -7.5055, gradient norm: 62.86: 18%|█▊ | 113/625 [00:17<01:17, 6.60it/s]

reward: -4.5840, last reward: -5.3180, gradient norm: 18.74: 18%|█▊ | 113/625 [00:17<01:17, 6.60it/s]

reward: -4.5840, last reward: -5.3180, gradient norm: 18.74: 18%|█▊ | 114/625 [00:17<01:17, 6.62it/s]

reward: -4.2976, last reward: -3.2083, gradient norm: 10.63: 18%|█▊ | 114/625 [00:17<01:17, 6.62it/s]

reward: -4.2976, last reward: -3.2083, gradient norm: 10.63: 18%|█▊ | 115/625 [00:17<01:17, 6.62it/s]

reward: -4.5275, last reward: -3.6873, gradient norm: 15.65: 18%|█▊ | 115/625 [00:17<01:17, 6.62it/s]

reward: -4.5275, last reward: -3.6873, gradient norm: 15.65: 19%|█▊ | 116/625 [00:17<01:16, 6.63it/s]

reward: -4.4107, last reward: -3.1624, gradient norm: 19.7: 19%|█▊ | 116/625 [00:17<01:16, 6.63it/s]

reward: -4.4107, last reward: -3.1624, gradient norm: 19.7: 19%|█▊ | 117/625 [00:17<01:16, 6.63it/s]

reward: -4.6372, last reward: -3.2571, gradient norm: 15.83: 19%|█▊ | 117/625 [00:17<01:16, 6.63it/s]

reward: -4.6372, last reward: -3.2571, gradient norm: 15.83: 19%|█▉ | 118/625 [00:17<01:16, 6.62it/s]

reward: -4.4039, last reward: -4.4428, gradient norm: 13.06: 19%|█▉ | 118/625 [00:17<01:16, 6.62it/s]

reward: -4.4039, last reward: -4.4428, gradient norm: 13.06: 19%|█▉ | 119/625 [00:17<01:16, 6.63it/s]

reward: -4.4728, last reward: -3.5628, gradient norm: 12.04: 19%|█▉ | 119/625 [00:18<01:16, 6.63it/s]

reward: -4.4728, last reward: -3.5628, gradient norm: 12.04: 19%|█▉ | 120/625 [00:18<01:16, 6.61it/s]

reward: -4.6767, last reward: -5.2466, gradient norm: 6.522: 19%|█▉ | 120/625 [00:18<01:16, 6.61it/s]

reward: -4.6767, last reward: -5.2466, gradient norm: 6.522: 19%|█▉ | 121/625 [00:18<01:16, 6.60it/s]

reward: -4.5873, last reward: -6.5072, gradient norm: 19.21: 19%|█▉ | 121/625 [00:18<01:16, 6.60it/s]

reward: -4.5873, last reward: -6.5072, gradient norm: 19.21: 20%|█▉ | 122/625 [00:18<01:16, 6.60it/s]

reward: -4.6548, last reward: -6.3766, gradient norm: 5.692: 20%|█▉ | 122/625 [00:18<01:16, 6.60it/s]

reward: -4.6548, last reward: -6.3766, gradient norm: 5.692: 20%|█▉ | 123/625 [00:18<01:16, 6.60it/s]

reward: -4.5134, last reward: -7.1955, gradient norm: 11.11: 20%|█▉ | 123/625 [00:18<01:16, 6.60it/s]

reward: -4.5134, last reward: -7.1955, gradient norm: 11.11: 20%|█▉ | 124/625 [00:18<01:15, 6.62it/s]

reward: -4.2481, last reward: -7.0591, gradient norm: 11.85: 20%|█▉ | 124/625 [00:18<01:15, 6.62it/s]

reward: -4.2481, last reward: -7.0591, gradient norm: 11.85: 20%|██ | 125/625 [00:18<01:15, 6.60it/s]

reward: -4.4500, last reward: -5.3368, gradient norm: 10.19: 20%|██ | 125/625 [00:19<01:15, 6.60it/s]

reward: -4.4500, last reward: -5.3368, gradient norm: 10.19: 20%|██ | 126/625 [00:19<01:15, 6.60it/s]

reward: -3.9708, last reward: -2.7059, gradient norm: 42.81: 20%|██ | 126/625 [00:19<01:15, 6.60it/s]

reward: -3.9708, last reward: -2.7059, gradient norm: 42.81: 20%|██ | 127/625 [00:19<01:15, 6.61it/s]

reward: -4.3031, last reward: -3.2534, gradient norm: 4.843: 20%|██ | 127/625 [00:19<01:15, 6.61it/s]

reward: -4.3031, last reward: -3.2534, gradient norm: 4.843: 20%|██ | 128/625 [00:19<01:15, 6.61it/s]

reward: -4.3327, last reward: -4.6193, gradient norm: 20.96: 20%|██ | 128/625 [00:19<01:15, 6.61it/s]

reward: -4.3327, last reward: -4.6193, gradient norm: 20.96: 21%|██ | 129/625 [00:19<01:15, 6.61it/s]

reward: -4.4831, last reward: -4.1172, gradient norm: 24.81: 21%|██ | 129/625 [00:19<01:15, 6.61it/s]

reward: -4.4831, last reward: -4.1172, gradient norm: 24.81: 21%|██ | 130/625 [00:19<01:14, 6.61it/s]

reward: -4.2593, last reward: -4.4219, gradient norm: 5.962: 21%|██ | 130/625 [00:19<01:14, 6.61it/s]

reward: -4.2593, last reward: -4.4219, gradient norm: 5.962: 21%|██ | 131/625 [00:19<01:14, 6.61it/s]

reward: -4.4800, last reward: -3.8380, gradient norm: 2.899: 21%|██ | 131/625 [00:19<01:14, 6.61it/s]

reward: -4.4800, last reward: -3.8380, gradient norm: 2.899: 21%|██ | 132/625 [00:19<01:14, 6.59it/s]

reward: -4.2721, last reward: -4.9048, gradient norm: 7.166: 21%|██▏ | 133/625 [00:20<01:14, 6.59it/s]

reward: -4.2419, last reward: -4.5248, gradient norm: 25.93: 21%|██▏ | 134/625 [00:20<01:14, 6.60it/s]

reward: -4.2139, last reward: -4.4278, gradient norm: 20.26: 22%|██▏ | 135/625 [00:20<01:14, 6.62it/s]

reward: -4.0690, last reward: -2.5140, gradient norm: 22.5: 22%|██▏ | 136/625 [00:20<01:13, 6.62it/s]

reward: -4.1140, last reward: -3.7402, gradient norm: 11.11: 22%|██▏ | 137/625 [00:20<01:13, 6.62it/s]

reward: -4.5356, last reward: -5.1636, gradient norm: 400.1: 22%|██▏ | 138/625 [00:20<01:13, 6.60it/s]

reward: -5.0671, last reward: -5.8798, gradient norm: 13.34: 22%|██▏ | 139/625 [00:20<01:13, 6.62it/s]

reward: -4.8918, last reward: -6.3298, gradient norm: 7.307: 22%|██▏ | 140/625 [00:21<01:13, 6.62it/s]

reward: -5.1779, last reward: -4.1915, gradient norm: 11.43: 23%|██▎ | 141/625 [00:21<01:13, 6.62it/s]

reward: -5.1771, last reward: -4.3624, gradient norm: 6.936: 23%|██▎ | 142/625 [00:21<01:12, 6.63it/s]

reward: -5.1683, last reward: -3.4810, gradient norm: 13.29: 23%|██▎ | 143/625 [00:21<01:13, 6.60it/s]

reward: -4.9373, last reward: -5.4435, gradient norm: 19.33: 23%|██▎ | 144/625 [00:21<01:12, 6.61it/s]

reward: -4.4396, last reward: -4.8092, gradient norm: 118.9: 23%|██▎ | 145/625 [00:21<01:12, 6.60it/s]

reward: -4.3911, last reward: -8.2572, gradient norm: 15.04: 23%|██▎ | 146/625 [00:22<01:12, 6.62it/s]

reward: -4.4212, last reward: -3.0260, gradient norm: 26.01: 24%|██▎ | 147/625 [00:22<01:12, 6.62it/s]

reward: -4.0939, last reward: -4.6478, gradient norm: 9.605: 24%|██▎ | 148/625 [00:22<01:12, 6.62it/s]

reward: -4.6606, last reward: -4.7289, gradient norm: 11.19: 24%|██▍ | 149/625 [00:22<01:11, 6.63it/s]

reward: -4.9300, last reward: -4.7193, gradient norm: 8.563: 24%|██▍ | 150/625 [00:22<01:11, 6.63it/s]

reward: -5.1166, last reward: -4.8514, gradient norm: 8.384: 24%|██▍ | 151/625 [00:22<01:11, 6.63it/s]

reward: -4.9108, last reward: -5.0672, gradient norm: 9.292: 24%|██▍ | 152/625 [00:22<01:11, 6.63it/s]

reward: -4.8591, last reward: -4.3768, gradient norm: 9.72: 24%|██▍ | 153/625 [00:23<01:11, 6.63it/s]

reward: -4.2721, last reward: -3.9976, gradient norm: 10.37: 25%|██▍ | 154/625 [00:23<01:11, 6.63it/s]

reward: -4.0576, last reward: -2.0067, gradient norm: 8.935: 25%|██▍ | 155/625 [00:23<01:10, 6.64it/s]

reward: -4.4199, last reward: -5.1722, gradient norm: 18.7: 25%|██▍ | 156/625 [00:23<01:10, 6.64it/s]

reward: -4.8310, last reward: -7.3466, gradient norm: 28.52: 25%|██▌ | 157/625 [00:23<01:10, 6.64it/s]

reward: -4.8631, last reward: -6.2492, gradient norm: 89.17: 25%|██▌ | 158/625 [00:23<01:10, 6.64it/s]

reward: -4.8763, last reward: -6.1277, gradient norm: 24.43: 25%|██▌ | 159/625 [00:24<01:10, 6.64it/s]

reward: -4.5562, last reward: -5.7446, gradient norm: 23.35: 26%|██▌ | 160/625 [00:24<01:10, 6.64it/s]

reward: -4.1082, last reward: -4.9830, gradient norm: 22.14: 26%|██▌ | 161/625 [00:24<01:09, 6.64it/s]

reward: -4.0946, last reward: -2.5229, gradient norm: 10.47: 26%|██▌ | 162/625 [00:24<01:09, 6.64it/s]

reward: -4.4574, last reward: -4.6900, gradient norm: 112.6: 26%|██▌ | 163/625 [00:24<01:09, 6.63it/s]

reward: -5.2229, last reward: -4.0318, gradient norm: 6.482: 26%|██▌ | 164/625 [00:24<01:09, 6.64it/s]

reward: -5.0543, last reward: -4.0817, gradient norm: 5.761: 26%|██▋ | 165/625 [00:24<01:09, 6.64it/s]

reward: -5.2809, last reward: -4.5118, gradient norm: 5.366: 27%|██▋ | 166/625 [00:25<01:08, 6.65it/s]

reward: -5.1142, last reward: -4.5635, gradient norm: 5.04: 27%|██▋ | 167/625 [00:25<01:08, 6.64it/s]

reward: -5.1949, last reward: -4.2327, gradient norm: 4.982: 27%|██▋ | 168/625 [00:25<01:08, 6.65it/s]

reward: -5.0967, last reward: -5.0387, gradient norm: 7.457: 27%|██▋ | 169/625 [00:25<01:08, 6.65it/s]

reward: -5.0782, last reward: -5.2150, gradient norm: 10.54: 27%|██▋ | 170/625 [00:25<01:08, 6.65it/s]

reward: -4.5222, last reward: -4.3725, gradient norm: 22.63: 27%|██▋ | 171/625 [00:25<01:08, 6.64it/s]

reward: -3.9288, last reward: -3.9837, gradient norm: 83.59: 28%|██▊ | 172/625 [00:25<01:08, 6.63it/s]

reward: -4.1416, last reward: -4.1099, gradient norm: 30.57: 28%|██▊ | 173/625 [00:26<01:08, 6.62it/s]

reward: -4.8620, last reward: -6.8475, gradient norm: 18.91: 28%|██▊ | 174/625 [00:26<01:08, 6.62it/s]

reward: -5.1807, last reward: -6.4375, gradient norm: 18.48: 28%|██▊ | 175/625 [00:26<01:07, 6.63it/s]

reward: -5.1148, last reward: -5.0645, gradient norm: 14.36: 28%|██▊ | 176/625 [00:26<01:07, 6.62it/s]

reward: -5.2751, last reward: -4.8313, gradient norm: 15.32: 28%|██▊ | 177/625 [00:26<01:07, 6.63it/s]

reward: -4.9286, last reward: -6.9770, gradient norm: 24.75: 28%|██▊ | 178/625 [00:26<01:07, 6.62it/s]

reward: -4.5735, last reward: -5.2837, gradient norm: 15.2: 29%|██▊ | 179/625 [00:27<01:07, 6.63it/s]